Breast Cancer Detection

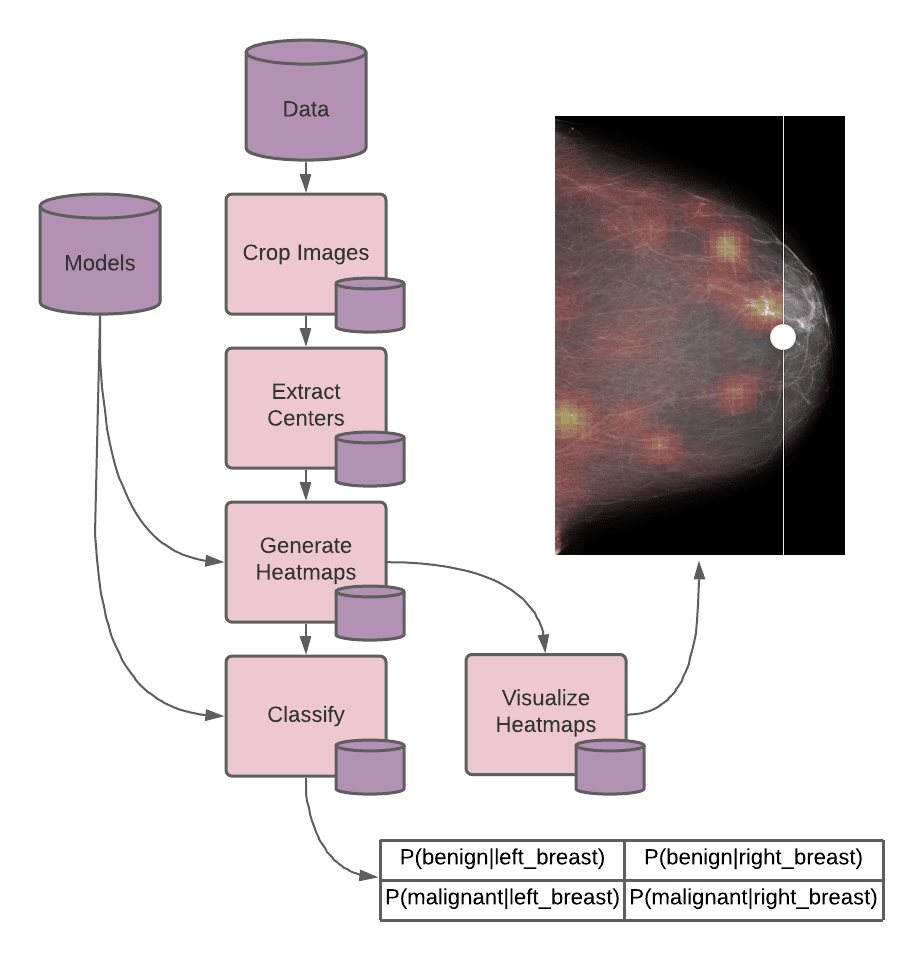

This example shows how to create a scalable inference pipeline for breast cancer detection.

There are different ways to scale inference pipelines with deep learning models. We implement two methods here with Pachyderm: data parallelism and task parallelism.

- In data parallelism, we split the data (in our case, breast exams) so that the pieces are processed independently in separate jobs.

- In task parallelism, we separate the CPU-based preprocessing from the GPU-based tasks, so that GPU resources are only needed for the GPU stage, which reduces cloud costs when scaling.
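
The two ideas can be illustrated with a minimal, library-agnostic Python sketch. It does not use Pachyderm; the `preprocess`, `predict`, and `run_stage` functions, the exam dictionaries, and the worker counts are all hypothetical stand-ins chosen for illustration, and a thread pool plays the role of the separate processing jobs.

```python
from concurrent.futures import ThreadPoolExecutor


def preprocess(exam):
    """Hypothetical CPU-bound stage: scale raw pixel values to [0, 1]."""
    return {
        "exam_id": exam["exam_id"],
        "pixels": [p / 255 for p in exam["pixels"]],
    }


def predict(preprocessed):
    """Hypothetical stand-in for the GPU-bound model: mean intensity as a toy score."""
    pixels = preprocessed["pixels"]
    return {
        "exam_id": preprocessed["exam_id"],
        "score": sum(pixels) / len(pixels),
    }


def run_stage(stage, items, workers):
    """Data parallelism: every item is handled independently by a pool of workers."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves the input order in its results.
        return list(pool.map(stage, items))


# Toy exams (assumed structure, not the dataset used by the example).
exams = [
    {"exam_id": "exam-1", "pixels": [0, 255]},
    {"exam_id": "exam-2", "pixels": [255, 255]},
]

# Task parallelism: preprocessing and prediction are separate stages, so each
# can be given its own resources (here, just a different worker count).
preprocessed = run_stage(preprocess, exams, workers=4)
results = run_stage(predict, preprocessed, workers=1)

for result in results:
    print(result["exam_id"], result["score"])
```

In a real deployment the two stages would run as separate pipeline steps, with only the prediction step scheduled on GPU hardware, while the per-exam split lets either step scale out independently.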