Data lineage uncovers the life cycle of data. It aims to show the complete flow of data from start to finish. By understanding, recording, and visualizing data as it flows from data sources to consumption, it makes the movement of that data clear.

This allows you to track and trace data from the original source to its final destination. How did it change? What changed? When? Why? Where did it branch off and how did that affect the model output?

TL;DR: Data Lineage in a Few Words

You can think of data lineage as similar to the concept of “audit trails”. In accounting, an audit trail is a record that shows changes made to financial accounts over time.

In data governance, the term “data trail” refers to a record that shows changes made to data over time. Data lineage serves more than compliance with industry regulations: it also helps you understand how business decisions are made, or how machine learning models perform, based on the datasets they use (e.g. which customers are targeted for marketing, which products are sold to which customers).

What is Data Lineage? (the Long Version)

Data lineage is a key component of data governance, providing transparency and accountability. It can be applied to any kind of data, including structured, unstructured, and semi-structured.

Whether it’s high-resolution satellite imagery or 4K video files for film and streaming television (unstructured data), JSON and YAML files (semi-structured), or the rows and columns in a spreadsheet or database (structured), a good data lineage system will help you understand where that data came from and how it changed over time. It will also keep immutable copies of all changes, so you can always roll back to any earlier version of the data.

Without immutability, you can lose the essential ability to go back to the original version or an earlier modification of the data, model, or code. Recording that a change happened is not enough if you can’t go back to it: you must be able to return to that state without fail. This is an essential component of reproducibility. Data lineage can be applied at any level of granularity, depending on how you define a datum for your model, from an individual record or document to a company’s entire database or object store.
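To make the immutability idea concrete, here is a minimal sketch of an append-only, content-addressed version store. It is an illustration only, not how any particular product works: the `VersionStore` class and its method names are hypothetical, and a real system would persist data to durable storage rather than keep it in memory.

```python
import hashlib


class VersionStore:
    """Append-only, content-addressed store: committed data is never overwritten."""

    def __init__(self):
        self._blobs = {}    # content hash -> bytes
        self._history = []  # content hashes in commit order, oldest first

    def commit(self, data: bytes) -> str:
        """Store a new version and return its content hash."""
        digest = hashlib.sha256(data).hexdigest()
        self._blobs.setdefault(digest, data)  # identical content is stored once
        self._history.append(digest)
        return digest

    def get(self, digest: str) -> bytes:
        """Fetch a version by its content hash."""
        return self._blobs[digest]

    def at(self, index: int) -> bytes:
        """Return the data as it was at a given position in the history."""
        return self._blobs[self._history[index]]

    def history(self) -> list:
        """Return a copy of the commit history."""
        return list(self._history)


store = VersionStore()
v1 = store.commit(b"id,amount\n1,10\n")
v2 = store.commit(b"id,amount\n1,10\n2,25\n")

# "Rolling back" is just reading an earlier version; nothing is destroyed.
original = store.get(v1)
```

Because each version is addressed by the hash of its contents, a later commit cannot silently replace an earlier one, which is the property that makes going back reliable.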

What Data Lineage Is Not

Data lineage is sometimes confused with or used interchangeably with the concepts of data provenance, data provenance analysis, and data provenance management. These differ from data lineage in several ways, although they all share the aim of better understanding how a dataset is created or transformed into another.

How Data Provenance Differs from Data Lineage

The short version is that data provenance refers to a set of metadata that describes how a given dataset has been created. Data provenance is usually associated with datasets that have been derived from other datasets through processes such as sampling, aggregation, descriptions, and computations. Data provenance can be used to understand how the quality of a dataset changes over time (e.g., how an aggregated report becomes less accurate when additional data is added over time). It can also show how the ontology (the conceptual structure) and semantics of a dataset change over time (e.g. as new fields get added).

In practice, the two concepts overlap, and both help you understand the journey of your data over time. To understand the data lineage of a particular job, data scientists must understand the data flow. A system that makes the data flow of a job easy to see is a key aid in debugging a pipeline and understanding shifts in model performance.
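One way to picture the data flow of a job is as a graph in which each dataset points back to the inputs it was derived from. The sketch below assumes a tiny, made-up pipeline; the dataset names and the `upstream` and `downstream` helpers are hypothetical and exist only to illustrate the idea.

```python
from collections import deque

# Hypothetical lineage edges: each output maps to the inputs it was derived from.
DERIVED_FROM = {
    "raw_events": [],
    "customers": [],
    "clean_events": ["raw_events"],
    "features": ["clean_events", "customers"],
    "model": ["features"],
}


def upstream(node, derived_from):
    """Return every dataset that `node` depends on, directly or indirectly."""
    seen = set()
    queue = deque(derived_from.get(node, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue
        seen.add(current)
        queue.extend(derived_from.get(current, []))
    return seen


def downstream(node, derived_from):
    """Return every dataset that a change to `node` could affect."""
    return {name for name in derived_from if node in upstream(name, derived_from)}


print(sorted(upstream("model", DERIVED_FROM)))
print(sorted(downstream("raw_events", DERIVED_FROM)))
```

Walking the graph upstream answers "where did this come from?", while walking it downstream answers "what could this change break?".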

How to Implement Data Lineage

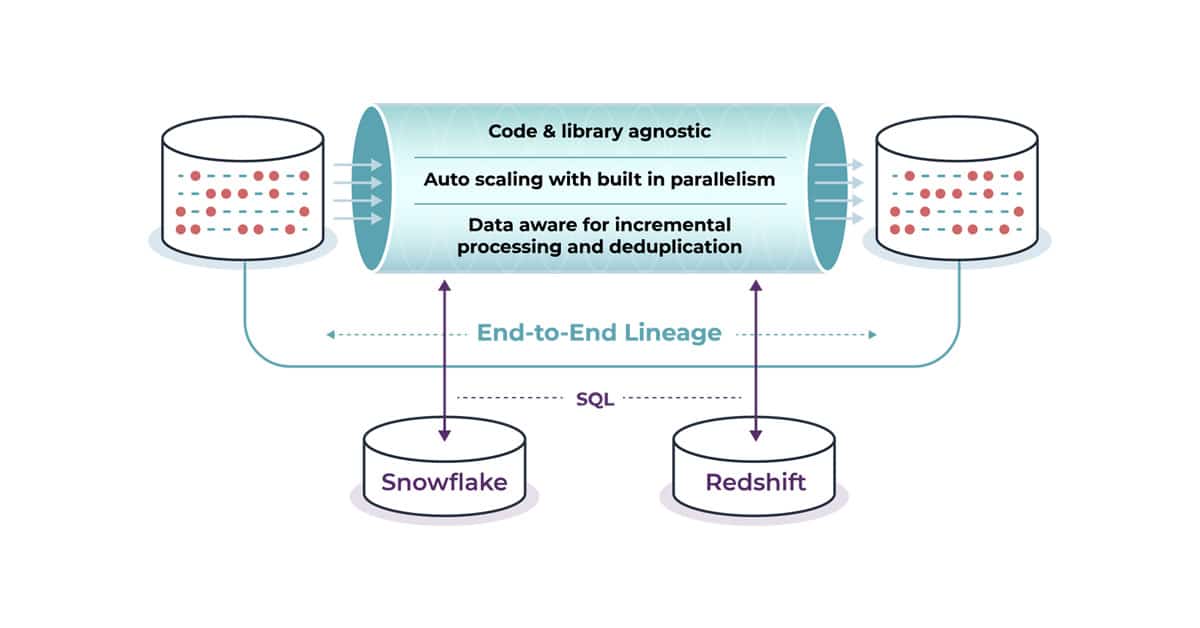

A good system for machine learning data lineage tracking will help you swiftly determine whether your data, your pipeline, or your code changed, or whether they all changed at the same time. Understanding that complex interplay between code, data, and pipeline helps you understand how a model performs in production. Tracing how a change in the pipeline affects everything downstream of it is known as impact analysis. For example, a data engineer may be asked to add a new column to a table, rearrange the columns in a table, or compress image or audio files. How do those changes affect the model’s performance? Is it drifting? Is it showing increased anomalies? Has its accuracy fallen off slowly or dramatically?

Going back and tracing where things changed helps you answer those questions and quickly get your model back to performing at its best. Data lineage is the key to having a better understanding of your models and to ensuring they work accurately in the real world. Without it, you’re flying blind and your models are flying blind too.
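As a rough sketch of how a system might tell whether the data, the code, or the pipeline changed between two runs, the example below fingerprints each ingredient and compares the results. The function names, the run record layout, and the sample inputs are all assumptions made for illustration; they do not describe any specific tool.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Return a stable hash of the given content."""
    return hashlib.sha256(content).hexdigest()


def record_run(data: bytes, code: bytes, pipeline_spec: bytes) -> dict:
    """Capture a fingerprint of each ingredient of a training run."""
    return {
        "data": fingerprint(data),
        "code": fingerprint(code),
        "pipeline": fingerprint(pipeline_spec),
    }


def what_changed(before: dict, after: dict) -> list:
    """List the ingredients whose fingerprints differ between two runs."""
    return sorted(key for key in before if before[key] != after.get(key))


# Hypothetical runs: only the data differs (a new column was added).
run_a = record_run(b"id,amount\n1,10\n", b"def train(): ...", b"image: trainer:1")
run_b = record_run(b"id,amount,region\n1,10,EU\n", b"def train(): ...", b"image: trainer:1")

changed = what_changed(run_a, run_b)
print(changed)
```

Storing a record like this alongside every run narrows the search when performance shifts: if only the data fingerprint differs, the code and pipeline can be ruled out first.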

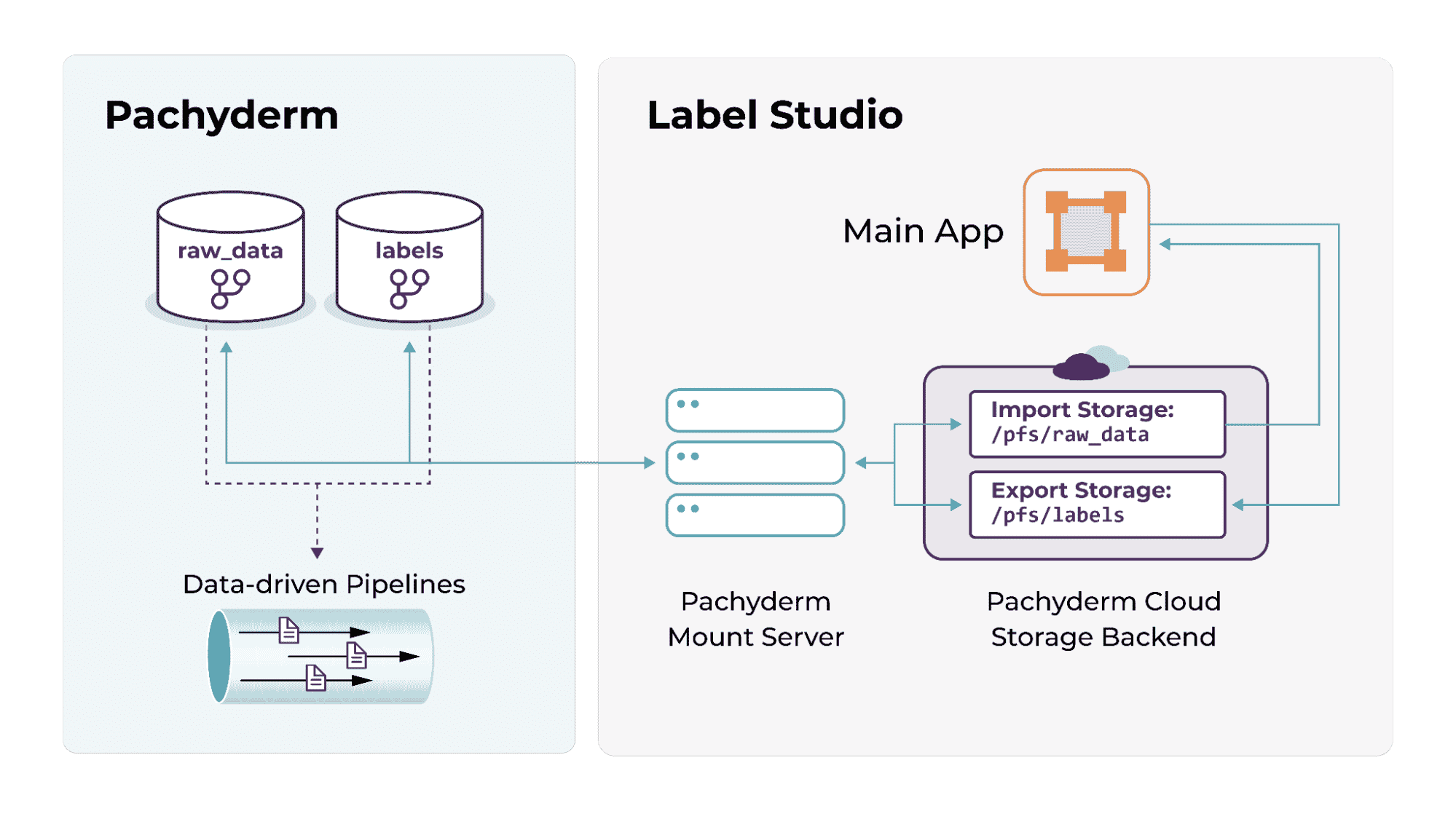

Pachyderm’s datum-driven pipelines are built to automate data lineage for structured and unstructured data alike, without requiring a separate metadata store. Data lineage is an essential part of the Machine Learning Loop. Get Pachyderm’s ebook, “Completing the Machine Learning Loop” today.