The Early Days of ML/AI Tools: DIY

Just a few years ago, if you wanted to do AI/ML you needed to roll your own stack from scratch.

AI pioneers like Google, Lyft, Uber, Tesla, and Amazon all hired cutting-edge engineering teams to solve the problems of bringing AI/ML out of the labs and universities and into the messy real world. Companies like Google built entire bespoke infrastructures and even custom chips to support a single app, like Google Translate.

The failure rate for many of those early solutions was super high, especially as they tried to generalize their infrastructure solutions to novel machine learning tasks. It wasn’t uncommon for the big tech players to abandon their early projects and start fresh as they tried to solve unexpected new problems in the fast changing world of AI.

The First Attempts at Standardizing MLOps Tools

The team at Uber built Michelangelo back in 2016-2017 with the idea that it would be the ultimate stack for all of their emerging machine learning needs. It turned out to be mostly good for the few use cases they built it for, but it didn’t generalize all that well to other machine learning needs. The project later splintered into multiple projects inside the company, and some of the original creators went on to start their own companies, most notably Tecton, based on what they learned.

But as AI/ML has gotten better and better at solving real world problems, we’ve seen an incredible explosion of new companies and projects looking to build a high performance, generalized machine learning infrastructure stack that will work for just about any ML project you can throw at it.

All-In-One Machine Learning Platform Valuations Are On the Rise

There’s also a surge of big money pouring into the space right now, with companies like Snorkel AI raking in $85 million and posting a blockbuster billion-dollar valuation. But the record for the biggest cash infusion so far goes to Databricks, which pulled in a whopping $1.6 billion, pushing the company’s valuation to $38 billion. The founders of Databricks built the venerable Spark project, tuned for crunching big data. Big data was a buzzword back in 2008 and it spawned two companies with their own billion-dollar valuations, Cloudera and Hortonworks, which eventually merged.

Since then we’ve seen Databricks and Spark pivot their focus to AI workloads and mostly drop the big data moniker from their marketing.

But is a platform built for big data really the machine learning platform we’re all waiting for or do we need something else to accelerate the rapidly emerging machine learning revolution?

To answer that it helps to know a little about how Spark got to be where it is today.

Big Data and Big Dreams: Building the Infrastructure for ML/AI

When we think of big data, we can’t help but think of pioneering tech powerhouse Google. Its very name comes from the number googol, which is a mind-boggling 1 followed by 100 zeros. From the very beginning the company saw itself as the organizer of all the world’s data, and it was one of the first companies to wrestle with truly “big data” before it was a Silicon Valley buzzword.

As Google grew in size, it quickly became clear that they couldn’t deal with the massive amounts of information pouring into their systems with traditional databases or file systems. They needed a new parallel architecture and that led them to create the Google File System (GFS), MapReduce (MR), and BigTable. The GFS provided a distributed file system spread across clusters of commodity hardware. BigTable delivered scalable storage for structured data, while MR introduced a new programming model, which filtered, sorted and summarized the data stored in GFS and BigTable.
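The programming model described above (map, then group and sort, then summarize) can be sketched in a few lines of single-machine Python. This is only an illustration of the idea, not Google's or Hadoop's actual API: the function names `map_phase`, `shuffle_phase`, `reduce_phase` and `run_word_count` are made up for this example, and a real system would spread each phase across many machines.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

Pair = Tuple[str, int]


def map_phase(records: Iterable[str],
              mapper: Callable[[str], Iterable[Pair]]) -> Iterator[Pair]:
    """Apply the mapper to every record and emit (key, value) pairs."""
    for record in records:
        yield from mapper(record)


def shuffle_phase(pairs: Iterable[Pair]) -> Dict[str, List[int]]:
    """Group values by key and sort the keys, as the framework would."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(groups: Dict[str, List[int]],
                 reducer: Callable[[str, List[int]], int]) -> Dict[str, int]:
    """Summarize each group of values down to a single result per key."""
    return {key: reducer(key, values) for key, values in groups.items()}


def word_mapper(line: str) -> Iterator[Pair]:
    """Emit (word, 1) for every word in a line of text."""
    for word in line.lower().split():
        yield word, 1


def count_reducer(key: str, values: List[int]) -> int:
    """Add up the counts collected for one word."""
    return sum(values)


def run_word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Chain the three phases into the classic word count job."""
    pairs = map_phase(lines, word_mapper)
    return reduce_phase(shuffle_phase(pairs), count_reducer)
```

Calling `run_word_count(["big data", "Big dreams"])` counts "big" twice and the other words once. The user only writes the mapper and the reducer; everything else belongs to the framework, which is what made the model attractive for running on clusters.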

Hadoop Emerges to Scale DataOps

Other web companies were struggling with the same problems as the Internet grew bigger with each passing day. The original Google papers inspired Hadoop, an open source project that Yahoo engineers did much to build out. Hadoop garnered a large open source community, and that community was the reason Cloudera and Hortonworks posted big valuations: they promised to commercialize Hadoop, and the market expected more and more companies to face a growing surge of data.

But Hadoop had some big problems.

Its clusters were notoriously difficult to set up and manage. Its fault tolerance was brittle and prone to breaking down. Its MapReduce API was cumbersome and verbose and required a ton of boilerplate code for even simple tasks. Even worse, it had serious performance issues because it wrote and fetched everything from the slowest component in all of computing: the hard disk. That was well before SSDs became dependable, and spinning disks were notoriously slow. Large MR jobs could run for hours or days and then crash with obscure errors.

New Approaches to Big Data Processing

Matei Zaharia, Chief Technologist and cofounder of Databricks, started working on a replacement in 2009 while at UC Berkeley, but the code really started to take shape in 2012, with the first official release of Spark coming in 2014.

Spark had a number of key improvements over Hadoop. It addressed the big limitations of the MapReduce paradigm, which forced an unnatural linearity on every job. Even worse, Hadoop spent way too much time reading and writing everything to those slow spinning disks again and again, which took a punishing toll on speed. Spark moved to do more of the operations in memory. Early papers showed it was 10-20X faster for certain jobs, and today it’s considered up to 100X faster for certain workloads, benefiting from in-memory operations and the rise of solid state disks, which cut down one of the worst bottlenecks in high performance computing.
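To make the disk-versus-memory point concrete, here is a toy sketch in plain Python. It is not Spark or Hadoop code: `DiskDataset`, `iterate_from_disk`, `iterate_in_memory` and `write_sample` are hypothetical names invented for this illustration, and the "algorithm" is just a running average. The only thing it shows is how many times an iterative job touches the disk under each approach.

```python
import os
import tempfile
from typing import List


class DiskDataset:
    """A file of numbers that counts how often it is read from disk."""

    def __init__(self, path: str) -> None:
        self.path = path
        self.reads = 0

    def load(self) -> List[float]:
        self.reads += 1
        with open(self.path) as handle:
            return [float(line) for line in handle]


def refine(estimate: float, values: List[float]) -> float:
    """One pass of a toy iterative algorithm: blend in the mean."""
    return 0.5 * estimate + 0.5 * (sum(values) / len(values))


def iterate_from_disk(dataset: DiskDataset, passes: int) -> float:
    """Disk-bound style: every pass re-reads its input from disk."""
    estimate = 0.0
    for _ in range(passes):
        estimate = refine(estimate, dataset.load())
    return estimate


def iterate_in_memory(dataset: DiskDataset, passes: int) -> float:
    """In-memory style: read once, then keep iterating over the cached data."""
    values = dataset.load()
    estimate = 0.0
    for _ in range(passes):
        estimate = refine(estimate, values)
    return estimate


def write_sample(values: List[float]) -> str:
    """Write the values to a temporary file, one per line, and return its path."""
    fd, path = tempfile.mkstemp(suffix=".txt")
    with os.fdopen(fd, "w") as handle:
        handle.write("\n".join(str(v) for v in values))
    return path
```

Both functions return the same answer for the same input, but five passes cost five disk reads in the first style and one in the second. On a real cluster, with large inputs and many iterations, that difference is where the speedups for iterative workloads come from.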

Hadoop also suffered from a series of sub-projects that addressed its limitations but made it increasingly difficult to run in production, like Apache Hive, Apache Storm, Apache Giraph, Apache Impala, and more. Each of them had its own APIs and cluster configurations, and with each new sub-project managing Hadoop got harder and harder. Spark benefited from the 20/20 hindsight of all these projects and the team worked to unify all those design patterns into a single engine for distributed data processing.

Since then, Spark has gained a wide following for business analytics and big data processing, and it’s increasingly being used for AI and seen as an AI engine.

But is Spark really the AI engine we’re looking for?

The Digital Divide Redux

Bruce Sterling once wrote that computers changed science fiction and created a massive digital divide between sci-fi before and after the invention of PCs. Pre-computer sci-fi would forever be confined to the analog world of ink and wood pulp and its predictions would never seem as relevant since computers redefined every advanced technology on the planet.

In the same way, when we look back at the history of Spark, we find a system that started development well before AlexNet, the massive breakthrough that spawned the deep learning revolution and the modern surge of machine learning tools. AlexNet is the turning point that catapulted AI into the modern era. It was one of the first times we could solve a problem with AI that we simply couldn’t solve with traditional hand coded logic.

When you have code written before one of the biggest breakthroughs in AI history, it’s hard to retrofit the needs of that new kind of software to an older architecture.

Even more, Hadoop and Spark were crafted to handle big data, a problem the FAANG companies and a few other large companies in the world face. Yet despite the big data buzz a few years back, it turns out that most companies outside of the internet industry just weren’t dealing with that scale of data. While every company in the world has seen an increase in data across their systems, few of them had new bits surging into their databases and disks every millisecond of every day.

It’s a Mistake to See ML as Nothing but a Big Data Problem

Machine learning isn’t so much about big data as it’s about having the right data.

As Andrew Ng said in his talk on moving from model-centric to data-centric AI, getting better performance might be as simple as focusing on better data labeling. While new breakthrough algorithms get the most press, Ng often found that switching a transformer from BERT to XLNet or RoBERTa yielded only a marginal improvement in performance, maybe 0.02%. But when they went back and adjusted their instructions to the labelers so those folks set the bounding boxes more consistently, they wound up with 20% better performance.

Still, sometimes bigger is better.

OpenAI spent years tuning their Dota 2 playing bot to take on the best players in the world.

They eventually built a reinforcement learning powerhouse that destroyed players at the highest levels with a 98% win rate by simulating tens of thousands of years of play. The system “plays 180 years’ worth of games every day — 80% against itself and 20% against past selves — on 256 Nvidia Tesla P100 graphics cards and 128,000 processor cores on Google’s Cloud Platform” costing millions of dollars just to train.

Yet as industry veteran François Chollet, creator of Keras, wryly noted on the Lex Fridman podcast, if you have a big enough dataset, any AI solution is nothing more than a brute force pattern recognition problem. That’s not to take anything away from OpenAI’s tremendous success, but simulating 10,000 years of games is not all that different from Deep Blue beating Garry Kasparov. When Deep Blue beat Kasparov at chess, lots of folks thought we were on the verge of a revolution in AI. After all, some of the smartest people in the world play chess, so teaching a machine to play chess with the great grandmasters must mean we’re just around the corner from unlocking the great mysteries of the mind.

It turns out, Deep Blue was mostly just a brute force tree search.

Once we had enough compute power to search deeply enough through the possibilities of chess, we could crack it, without a heck of a lot of higher intelligence behind it at all. Just as Deep Blue taught us that solving chess wasn’t the key to unlocking the mysteries of the human mind, OpenAI’s Dota 2 bot taught us that you can solve a lot of AI problems with brute force and compute power, but it doesn’t mean all that much for the average AI solution, which works with a lot less data and compute.

While a huge dataset of skin cancer images can help your algorithms get better at detection, it’s better to have a set of clear, diverse, well-labeled images, along with images of normal skin, to feed your learning systems.

Unstructured Data – The Missing Killer Feature for Data-Centric AI

There’s one more thing missing from most of today’s solutions and it’s a big one. More and more of today’s most cutting edge ML applications are unstructured.

Whether that’s crunching through massive video files for film and television to process special effects, or doing dialogue detection so you know where to place an ad in the video without cutting someone off mid-sentence, or churning through high resolution satellite images that can hit 300 to 500 GB apiece, or dealing with big waves of genetic data in biotech, or working with large audio files for translation and music, the most cutting-edge machine learning deals with the messy, unstructured world humans navigate so easily.

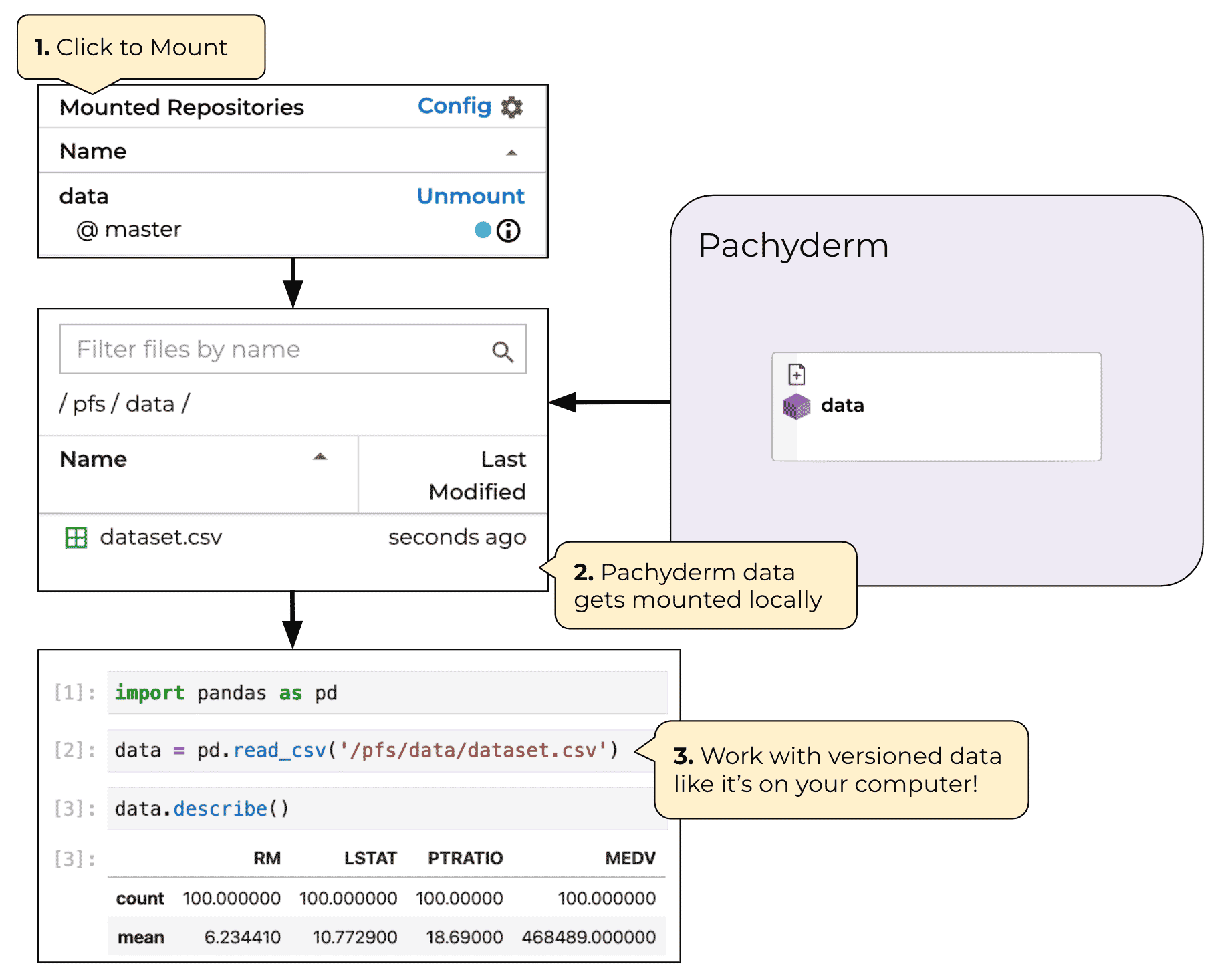

For years, AI struggled with audio, video, imagery, unstructured text and genomics data. But now, finally, we have systems like Pachyderm that make working with unstructured data a key focus of the platform.

More than 80% of the data in enterprises is unstructured and yet 80% of the tools seem to focus on structured data.

Why?

Because we understand it well. It was the dominant design pattern in computing history. The rise of big databases and highly structured text made the web what it is today. We understand how to deal with structured data but we’re just beginning to understand unstructured data.

Most of the systems, including Spark, are laser focused on structured data. Databricks uses a database backend, with Parquet files, on the lakehouse architecture. Despite a lot of headlines and a huge marketing surge that touts Delta Lake’s capabilities with unstructured data, the documentation just doesn’t back it up. It’s really only documented to work with structured and semi-structured data. Databricks only has one publicly available demo of how to do image processing on the platform.

The Future of Machine Learning Must Focus on All Data

Where are the big audio and video files? Where are the massive, high resolution images?

The reason those examples are missing in action is simple. They don’t work.

Audio, video and giant genetics sequences don’t fit well into a database structure.

Try shoving 4K movie clips into Parquet files and see what happens.

If you try to shoehorn big, binary data into databases it just doesn’t scale or perform well. You need a flat file system structure to deal with it, so you can work with those files directly. That’s why the tools that will come to dominate the MLOps landscape in the coming decade will make unstructured data a first class citizen, but we can’t get there by retrofitting old architectures to the needs of tomorrow’s software.

We need tools born in the data-centric world of modern AI and deep learning, tools born after AlexNet, not tools retrofitted with a fresh coat of marketing paint.

The One-Stop Shop Problem for ML Platforms

There’s one last big problem with Spark.

They’ve got to port a lot of ML tools and it’s hard to keep up.

The AI/ML ecosystem is overflowing with cutting-edge frameworks. Whether it’s PyTorch, MXNet, or TensorFlow, or Anaconda, or Hugging Face releasing their incredible set of pre-trained models, or an obscure NLP library that just got released last week, it takes time to port those frameworks to work on an old system, especially one like Spark that’s accessed through highly structured programming techniques, versus the natural and fluid nature of modern code that prefers direct access.

Databricks has to port all of these fast-moving tools to a set of libraries to offer customers and there’s simply no way to keep up with the rich ecosystem that’s out there.

Breaking MLOps Out of the Walled Garden

It’s much better to have a tool that’s code agnostic, where you can package up any code you want into a container and run it directly against your dataset. You don’t have to wait for an army of paid programmers to painstakingly port it because porting simply doesn’t scale. You don’t want to wait for a company to try and curate a vast and rich ecosystem. You want to pick and choose yourself because your provider may not have the most up to date versions or have the software you want at all.
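As a rough sketch of what "code agnostic" can mean in practice, the Python below runs any executable once per file in a flat directory, feeding the file in on stdin and saving whatever comes out on stdout. It does not reproduce any particular platform's API: `run_on_each_file` and `demo` are hypothetical names, and the stdin/stdout contract is an assumption chosen to keep the example small. The runner never inspects the command, so it could just as well be a container invocation or a binary written in R, Rust, or C++.

```python
import subprocess
import sys
import tempfile
from pathlib import Path
from typing import Dict, List, Sequence, Union

PathLike = Union[str, Path]


def run_on_each_file(command: Sequence[str],
                     input_dir: PathLike,
                     output_dir: PathLike) -> List[Path]:
    """Run `command` once per input file.

    Each file's bytes are piped to the command's stdin, and its stdout is
    written to a file of the same name in `output_dir`.
    """
    out = Path(output_dir)
    out.mkdir(parents=True, exist_ok=True)
    written: List[Path] = []
    for src in sorted(Path(input_dir).iterdir()):
        if not src.is_file():
            continue
        # check=True raises if the user's code exits with a non-zero status.
        result = subprocess.run(command, input=src.read_bytes(),
                                capture_output=True, check=True)
        dst = out / src.name
        dst.write_bytes(result.stdout)
        written.append(dst)
    return written


def demo() -> Dict[str, str]:
    """Usage example: uppercase every file using a stand-in command."""
    # Stand-in for a container or any other executable: a Python one-liner.
    command = [sys.executable, "-c",
               "import sys; sys.stdout.write(sys.stdin.read().upper())"]
    with tempfile.TemporaryDirectory() as tmp:
        inputs = Path(tmp) / "in"
        inputs.mkdir()
        (inputs / "a.txt").write_text("hello")
        (inputs / "b.txt").write_text("world")
        outputs = run_on_each_file(command, inputs, Path(tmp) / "out")
        return {path.name: path.read_text() for path in outputs}
```

The design choice worth noticing is that the runner knows nothing about the language or framework inside the command. Swapping in a new tool means changing one list of strings, not waiting for someone to port a library.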

Yahoo tried that in the early days of the web. They employed a small army of people to read and curate the web. That worked fine when the web was small and manageable but that strategy quickly fell apart as the web surged in popularity and grew exponentially. It’s why Google’s more automated methods of indexing the web quickly overtook them and why they dominate search today.

The system we need in machine learning is a system that easily and effortlessly supports the endless array of tooling without waiting for the maintainers of a single project to port that tool over.

The Machine Learning Tools of Tomorrow

The future of MLOps needs an abstract factory for ML, the way Kubernetes is an abstract factory for any web app you can throw at it.

Kubeflow was supposed to be that, but it’s basically just another Python pipelining engine that has to support every single language by hand. We need a tool that can easily handle R, Java, C/C++, Rust or whatever a data scientist wants to use, without waiting for the team to support it. The tool should allow you to use any language you want right now.

What About Today’s MLOps Platforms?

And while a lot of software platforms out there claim to have an end-to-end solution, there is no end-to-end solution for AI/ML and there won’t be for years. The only place an end-to-end solution exists is in the minds of the marketing teams behind Databricks, Amazon’s SageMaker and Google’s Vertex AI, not to mention just about every small to midsize player in the space.

If you believe the marketing hype, those tools solve every problem in AI/ML and also slice bread, pick up your kids after school and remind you to call your mom on her birthday.

While those tools are fantastic at certain kinds of machine learning solutions, like churn prediction, recommendation engines and log analysis, they start to fall very badly short when it comes to the most cutting edge applications in AI.

Your MLOps Use Case Drives Your Tool Choice

If you want to crunch through entire DNA sequences to find the latest drugs, or if you want to chop up a 1 TB high resolution satellite image into chunks to track ships surging across the ocean, or you want to iterate over a million 3D lung scans looking for cancer, or you want to process big videos to do automatic lip syncing in movies, or you want to generate reams of synthetic data to augment your health data, you’re out of luck. You’ll need another platform or multiple platforms.
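To give one of those examples some shape, here is a minimal sketch of the first step in chopping a huge image into chunks: computing the tile windows. It assumes nothing about the image format or the library used to read pixels, and `Tile` and `tile_windows` are hypothetical names for this illustration. Each window could then be read and processed independently, which is what lets the work spread across many workers.

```python
from typing import Iterator, NamedTuple


class Tile(NamedTuple):
    """A rectangular window into a larger image, in pixel coordinates."""
    x: int
    y: int
    width: int
    height: int


def tile_windows(image_width: int, image_height: int,
                 tile_size: int) -> Iterator[Tile]:
    """Yield non-overlapping tiles that together cover the whole image.

    Tiles on the right and bottom edges are clipped to the image bounds.
    """
    if tile_size <= 0:
        raise ValueError("tile_size must be positive")
    for y in range(0, image_height, tile_size):
        for x in range(0, image_width, tile_size):
            yield Tile(x, y,
                       min(tile_size, image_width - x),
                       min(tile_size, image_height - y))
```

For a 5 by 3 pixel image and a tile size of 2, this yields six tiles, with the last one clipped to a single pixel. The same arithmetic applies to an image hundreds of thousands of pixels wide; only the numbers change.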

The most advanced teams will need more than one tool. It’s as simple as that. They’ll need tools that create incredibly accurate synthetic data, or that provide a best-in-class feature store, or a versioned data lake with lineage, or advanced auditing and reporting so they can prove their algorithms aren’t biased to auditors who aren’t data scientists. Those teams will have a collection of software stacked together to solve these highly advanced needs. They’ll have some core platforms and they’ll have some of their own hand-crafted solutions too but they won’t have a single tool to rule them all.

Until a true end-to-end platform emerges, we’ll need a suite of best-of-breed tools, coming out of the rapid evolution of the canonical stack for machine learning over the next 5-10 years.

The next-gen stack will help accelerate AI out of the research labs and the halls of big tech, and into companies and individual practitioners of all shapes and sizes. It will lead to an explosion of incredible new applications that touch every aspect of our lives.

But first we have to leap from the last stage of AI to the next one.

We can’t build the apps of tomorrow on the platforms of yesterday.