As AI technologies become more and more integrated into all areas of our lives, organizations need to apply the same level of reliability that exists in traditional software development practices. Data versioning is one of the critical components of a robust AI development workflow. With reproducible pipelines and integration with leading data science tools, such as JupyterHub and Kubeflow, Pachyderm is just the solution many data scientists are looking for.

In this presentation, Svetlana Karslioglu, a Senior Technical Writer at Pachyderm, talks about reproducibility and data versioning and how not tracking your data might contribute to data science project failures even when seemingly everything goes right. Bias can sneak into even the most reliable datasets and produce misleading results that can impact the lives of many people.

A bit of housekeeping: This talk references Pachyderm Hub, which we stopped offering in February 2022. You can instead install Pachyderm Community and try our library of examples.

Talk Transcript: Building More Equitable Datasets with Pachyderm

Hello, everyone. My name is Svetlana Karslioglu. I’m a technical writer at Pachyderm. Thank you for coming today. And happy International Women’s Day to the ladies in the room. How many of you have done some data science, machine learning? Okay. Cool. Thank you. And how many of you are familiar with Docker and Kubernetes? Cool. So today we’re going to talk about reproducibility, what it means, and why we should care. Okay. So these days we see an increase in the use of AI in every industry. We see AI being used in healthcare, HR, automotive, many other areas. Enterprise growth has been skyrocketing in the last few years. There are a bunch of AI courses out there. Private universities and public universities are offering all kinds of programs for data science and AI courses together with a bunch of boot camps. And every day more and more people are enrolling in all kinds of AI and machine learning programs to obtain a data science degree. And we all seem to be approaching the future powered by intelligent machines we all have been dreaming about. And these machines are going to do everything for us while we’re going to just enjoy our time… or will we?

A Brief History of AI

In the last few years, the number of AI startups skyrocketed as well. So let’s take a step back and look at the history of AI first in the 20th century. Although for some of us AI is a new thing, AI as a field of study has been around for a very long time actually. The Dartmouth Summer Research Project in 1956 is considered the founding event. After that there was a series of AI successes and failures, including the creation of a checkers program that eventually beat its own creator, and the unification algorithm, and many, many other things. We all know that the success of any industry really depends on funding. And when initial results were not delivered, investors kind of lost their interest. But that gave time for data scientists to devise new methods that were much more mathematical. Statistics and probability theory became widely deployed. And what else happened during that time is that hardware became much faster and cheaper. Some AI algorithms are very computationally intensive, such as image processing.

So this all brought us to the 2010s when speech and image recognition technologies thrived, which created a new wave of AI excitement. Sorry. Oops. So AI machines became much smarter and much stronger. And in 2017, we had another breakthrough with AlphaGo, an algorithm that was able to beat the world Go champion. And some people even thought that that event meant that it’s the time when general-purpose AI can be invented as well. Have you guys played Go? So if you guys are not familiar with Go, Go is much, much harder than chess. Chess only has 400 possible positions after each player’s first move, while Go has more than 100,000. So that was a big step. So obviously, data science is again the hottest topic. With the number of open positions for data scientists, thousands of people are working to make our lives better, including probably you. But can these algorithms also be wrong?

Emergent Technology and Early Missteps

Over the last decade, we’ve seen a few whopping AI failures that all started as promising projects. So we probably all remember IBM Watson. That was an ambitious IBM project in 2014. Watson was supposed to revolutionize healthcare. In a few public demonstrations, Watson was given a list of symptoms and came up with a list of possible diagnoses with remarkable accuracy. Unfortunately, the results that Watson was providing when tested in real life were very far from being accurate. Its oncology diagnoses were completely off. And the ultimate reason for this was that Watson’s data scientists didn’t have access to real data. They had to come up with a synthetic data set, which is an extremely difficult task that can easily go wrong. Like in a math problem, if the first step is wrong, all the rest are automatically wrong. In the end, from the glorious promise of becoming a superintelligent AI doctor, Watson has been reduced to a humble role of an AI assistant.

Another example of an AI failure is image recognition. One of the experimental models at the University of Washington was designed to browse through images and identify whether the image had a husky or a wolf pictured on it. While the model was seemingly predicting the results with 90% accuracy, over time data scientists understood that the model was identifying some of the images incorrectly, and the model was actually not identifying huskies or wolves, but the snow in the pictures, because most of the huskies were pictured on the grass and most of the wolves were pictured on snow. This example shows that even if you think that your algorithm is working correctly, it might be doing a completely different thing from what it was designed to do. And of course, bias is a big problem for any data set.

When Machine Learning Reveals Patterns of Bias

So, for example, in some languages, including Turkish, the word O can mean both she and he, depending on the context, and when the context is ambiguous, the system chooses to reflect what is already a gender stereotype in the society. For example, Google Translate translated the phrase he/she is a scientist into its masculine form and the phrase he/she is a secretary into its feminine form. This is how bias can sneak into a data set that is not overall bad, and such bias cannot be predicted. So these are just a few examples, but they all have a common pattern: The data set that the data scientists used was either incomplete, artificially created, or biased. Today, when AI is becoming instrumental in the decision-making process across multiple industries, we need to make sure that we have established standards to control the quality of AI systems. Every day we hear that AI is hurting people, breaking their lives. It would be extremely difficult to recover from something like that, and no one is protecting them from this.

Seeking Reproducibility

Another side of the problem is that a large number of studies cannot be reproduced. Nature magazine conducted a survey which revealed that over 70% of scientists cannot reproduce results of other scientists and even their own. They can’t reproduce their own experiments. And most of the scientists agree that the main reason is that the data is cherry-picked to support the hypothesis. We see similar problems and tendencies in data science for the same reasons, and I think this is very scary.

To help improve transparency and reliability of data science experiments, the following seems to be essential:

- The quality of analyzed data must be improved. While trying to prove a hypothesis, it might be tempting to cherry-pick data, so organizations must have a robust process that verifies that the data that is being used to train an algorithm is complete, unbiased, and diverse.

- The sample size must be large as well. The number of published AI papers grew from just a few of them in the ’90s to more than 15,000 in 2015. And this number keeps growing. Because of the hype, it is hard to keep up with this amount of papers and understand which of them are truly scientifically important and which are not. The number of trials in many of them is shockingly small.

- The tooling must ensure that there is an audit trail available to other scientists to recreate the same results. Well, of course, some of the AI implementations are not ideal, but we cannot really live without AI. On a large scale, AI significantly improves the quality of our lives. The current state of AI infrastructure is now mature enough and needs production-ready solutions and processes to reach the level of stability and accuracy we need.

So, what would help data scientists to better track their experiments and correct them as needed on a regular basis? What kind of system can help to ensure that you can always go back and fix a biased algorithm? And what should people who consume the results of the AI work demand from the AI platform providers?

Establishing a Machine Learning Lifecycle

If you look at software development, there is a lifecycle, right? So we have all the stages that are pictured on this slide. The software lifecycle includes multiple iterations of planning, developing, testing, releasing, and maintaining the software. Tools like version control systems and continuous delivery became natural for any software development team. Data scientists deserve to have the same level of reliability. Data scientists need a system that can track versions of data sets used to train their algorithms. A version control system, similar maybe to Git, that tracks data set changes and the provenance of their results. Unfortunately, Git cannot really solve this problem because Git has a file size limitation, and AI data sets are massive, sometimes with gigabytes and petabytes of binary data.

So what does a reasonable data science solution look like? Today, we hear this new term more and more often, MLOps or AIOps. The goal of MLOps is to provide a reliable infrastructure for reproducible and verifiable data science. And at Pachyderm, we have built such a platform. If we think about software development as a pipeline, this is probably what it looks like. Developers write code. They store it in a version control system. They run their tests, and then they deploy the code to production, and then they do the same thing all over again. And if we think about a data science pipeline, this is what it may look like. So the data scientists would acquire data, clean it, train on it, test the model, and then deploy it to production. So this is high level, but more or less this is what it is.

Why Pachyderm is Essential to MLOps

So what is Pachyderm in a nutshell? It is those three things on the slide. It is data versioning, data pipelines, and data lineage. Pachyderm operates with concepts like repositories, branches, commits that are familiar to everyone who uses Git or GitHub or a Git-based system. Pachyderm enables you to– I’m sorry. Pachyderm enables you to manage and version control your data in much the same way Git does for code. In Pachyderm, you can version control petabytes of data with many of the same concepts. Commits are immutable snapshots of your data. And then you can also see what happens to that data set over time. On this slide, we have a repository that is called Images, and it has a branch master. And these are all the commits that were submitted into that repository. You can access those commits at any time by that hash number. Very similar to Git.
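To make the idea of immutable commits on a branch more concrete, here is a minimal, in-memory sketch in Python. It is a toy model and not how Pachyderm is implemented: the `ToyRepo` class, its methods, and the choice to derive commit IDs from a hash of the commit contents are all assumptions made for illustration.

```python
import hashlib
import json


class ToyRepo:
    """A toy, in-memory model of a versioned data repository (not Pachyderm's implementation)."""

    def __init__(self, name):
        self.name = name
        self.commits = {}    # commit ID -> {"parent": ..., "files": {path: content hash}}
        self.branches = {}   # branch name -> ID of the newest commit on that branch

    def put_file(self, branch, path, data):
        """Record a new commit that adds or replaces one file; earlier commits are never changed."""
        parent = self.branches.get(branch)
        files = dict(self.commits[parent]["files"]) if parent else {}
        files[path] = hashlib.sha256(data).hexdigest()
        body = {"parent": parent, "files": files}
        # Assumption of this sketch: the commit ID is a hash of the commit's content.
        commit_id = hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()[:12]
        self.commits[commit_id] = body
        self.branches[branch] = commit_id
        return commit_id

    def list_files(self, commit_id):
        """Return the sorted file paths visible in a given commit."""
        return sorted(self.commits[commit_id]["files"])


images = ToyRepo("images")
first = images.put_file("master", "liberty.png", b"fake image bytes")
second = images.put_file("master", "kitten.png", b"more fake bytes")

print(images.list_files(first))    # the first snapshot is unchanged by the second commit
print(images.list_files(second))
```

Because each commit stores its own file listing and is never modified, looking up an older commit ID always returns the data set exactly as it was at that point.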

Data pipelines. So a data pipeline is another Pachyderm concept. Not only do you need to version your data, but you also need to transform and process your data. Building multilevel transformations against your data is fundamentally important. In Pachyderm, we make pipelines completely Kubernetes native. Every pipeline is a Kubernetes pod. A pipeline can run your containerized code against data that you put into your Pachyderm repository. It can use any languages and libraries you want because it’s a container. It does not matter what type of data science you are doing. It doesn’t matter which industry you work in, whether you are doing bioinformatics or genomics, or you’re using some special library. Whether you write in Python or R or Go, it does not matter because it all goes into a container.

See Every Intermediate Step of Your Data’s Transformation

Your pipeline puts the results into an output repository. So not only do you have your input data versioned, you also have your output data versioned as well. Every time you put new data into a Pachyderm repository, Pachyderm triggers a job that runs that pipeline against the new data. So you can see here on the slide, we have a DAG for– actually, I think it’s a hyperparameter tuning example where we have the parameters repository. We have our model. We have the split step. We have the test step, and in the end, we select the best model out of them. Now that we understand that we can have multiple pipelines chained together, and we can have multiple repositories, this brings us to our last concept, which is data lineage. Have you heard this term before? Data provenance or data lineage? Yeah.

So data lineage helps you to understand where data originates and what happens to it over time and how you got the present result. Data lineage in Pachyderm traces your data across multiple repositories. You have many versions of your data, but you also have many versions of your code. Data lineage enables you to look at results of your data pipeline and then see every version of data and code that led you to that result. And not only that, it shows you how every version of data relates to every version of code. And this is crucial for reproducible data science. Data lineage is a property of your data. It’s important not only to version your data, but also to understand its origin. And this is what data lineage gives you. So if you can see here, every commit in Pachyderm has this little field called provenance, and you can see every single pipeline that contributed to that result so you can check how you got there.

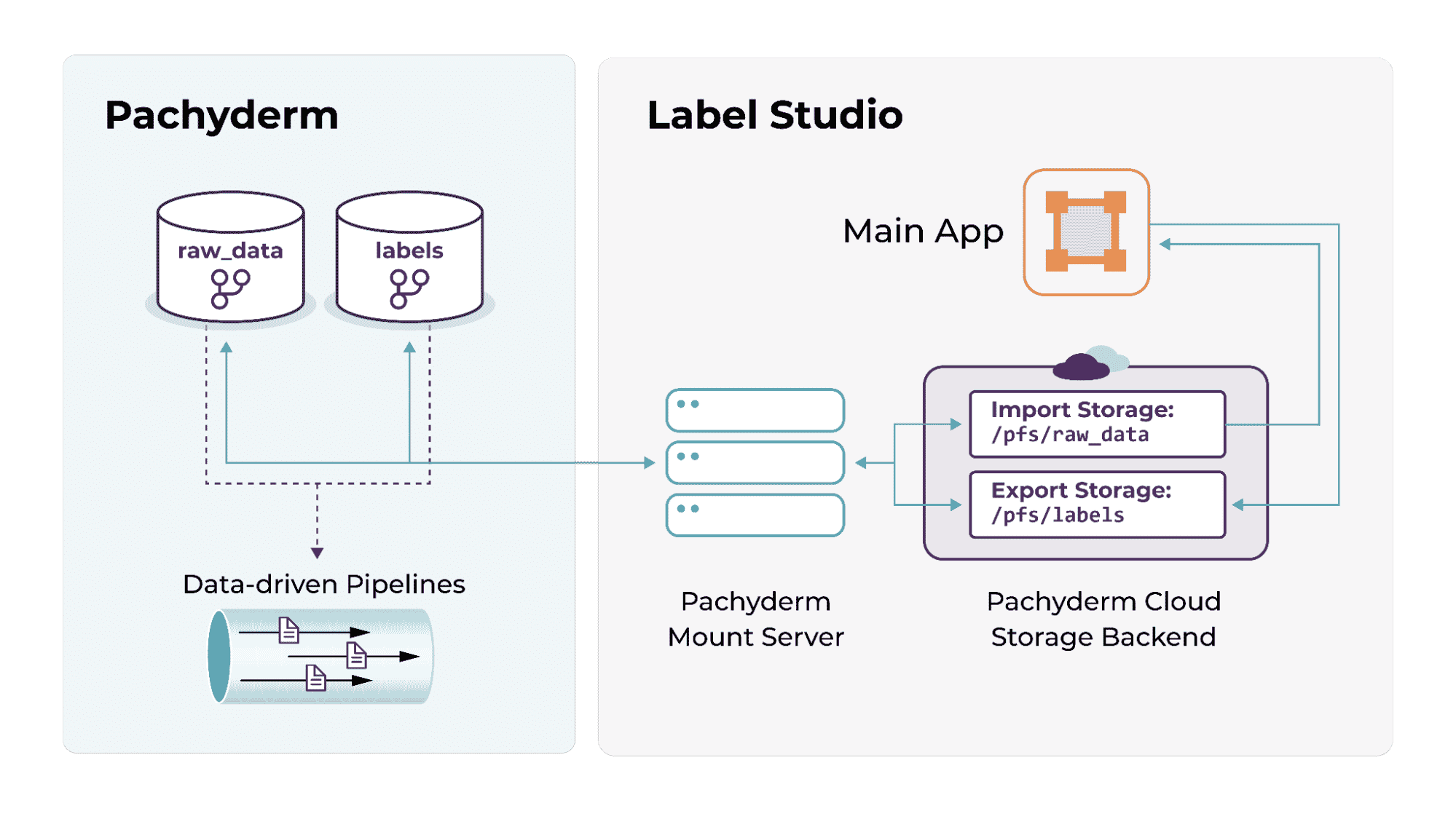
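The provenance idea can be sketched as a walk over a small graph in which every output commit records the commits it was computed from. This is a toy illustration only: the commit names (`montage@c3`, `edges-code@v1`, and so on) and the `lineage` helper are hypothetical, and Pachyderm's actual provenance format is not shown here.

```python
# Toy provenance graph: each commit lists the commits it was directly computed from.
# All commit names below are made up for illustration.
PROVENANCE = {
    "montage@c3": ["images@a1", "edges@b2"],
    "edges@b2": ["images@a1", "edges-code@v1"],
    "images@a1": [],
    "edges-code@v1": [],
}


def lineage(commit, graph):
    """Return every upstream commit that contributed to `commit`, each listed once."""
    seen = []
    stack = list(graph[commit])
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # already visited through another path
        seen.append(current)
        stack.extend(graph[current])
    return seen


print(sorted(lineage("montage@c3", PROVENANCE)))
```

Walking the graph from a result back to its inputs is what lets you answer which versions of data and code produced a given output.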

All right. So the last thing that I want to show you before we jump into a demo is the overall Pachyderm architecture and how it relates to the broader ecosystem. Pachyderm runs on top of Kubernetes, meaning that it can be used on all major cloud platforms like AWS, Google Cloud, Azure, as well as on-premises. And it provides all the benefits of a containerized application, which include self-healing, resource isolation, and many others. And we do work with major data science tools such as JupyterHub and Kubeflow. And we really closely work with folks from Seldon and Comet ML. And the very last thing for those who are not as familiar with Kubernetes, but who still want to keep track of data versions and provenance, we have recently launched a hosted version of Pachyderm, which is called PachHub. So make sure to check it out. You can run your experiments online as well.

Pachyderm Demo: Reproducible Data Pipelines

And for today’s demo, I’ve chosen a very simple example of data detection– oh, I’m sorry. Edge detection with the OpenCV library. So if you are interested to try out this example, it can be found on GitHub in our repository under Examples. Basically, we have two pipelines here, which are the Edges pipeline that performs the edge detection and the Montage pipeline that uses the ImageMagick library to create a montage of the images that we have. And we use a very simple script that we wrote with the ImageMagick montage command. And this is what it’s going to do. So edge detection is basically going to take this picture and turn it into this picture. So this is what the pipeline looks like in Pachyderm.

As you can see, we have a repository called Images that we’ve seen before on other slides. We have a transformation section where we specify our Docker image, which is in this case, Pachyderm OpenCV. And we have a command that we execute, and it’s Python 3 that executes the script that we wrote. This is a very simple script. It basically does the edge detection and then goes and performs it for every single file that it finds in the repository. Yeah. And this is our Montage pipeline that basically– so this is a bit of a different technique from the other one. Here we combine inputs from two repositories. One is the images repository. And one is the results of the Edges pipeline. And this is our transformation step that basically takes the montage command from the Docker image and creates the image with the following parameters. All right. Let’s do the live demo.
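As a rough sketch of what the two pipeline specifications described above might contain, the following Python snippet builds them as dictionaries and prints them as JSON. The field names follow the Pachyderm 1.x pipeline specification as best recalled, and the script path, the ImageMagick image name, and the montage shell command are assumptions; check all of them against the documentation and the example repository for your Pachyderm version.

```python
import json

# Sketch of the Edges pipeline specification. Field names are recalled from the
# Pachyderm 1.x pipeline spec and should be verified for your version.
edges_spec = {
    "pipeline": {"name": "edges"},
    "transform": {
        "image": "pachyderm/opencv",          # Docker image named in the talk
        "cmd": ["python3", "/edges.py"],      # script path is an assumption
    },
    "input": {
        # Assumed glob: "/*" treats each top-level file in the repo as a separate unit of work.
        "pfs": {"repo": "images", "glob": "/*"},
    },
}

# Sketch of the Montage pipeline: a cross input combines the images repository with the
# output repository of the Edges pipeline. Image name and shell command are assumptions.
montage_spec = {
    "pipeline": {"name": "montage"},
    "transform": {
        "image": "v4tech/imagemagick",
        "cmd": ["sh"],
        "stdin": ["montage $(find /pfs -type f | sort) /pfs/out/montage.png"],
    },
    "input": {
        "cross": [
            {"pfs": {"repo": "images", "glob": "/"}},
            {"pfs": {"repo": "edges", "glob": "/"}},
        ]
    },
}

for spec in (edges_spec, montage_spec):
    print(json.dumps(spec, indent=2))
```

Each printed document could be saved to a JSON file and passed to the pachctl create pipeline command used in the demo.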

So I already have a Pachyderm cluster running on my laptop. So if you are familiar with Kubernetes, you can do kubectl get pods.

All right. Sorry. So you can see we have three pods, and they’re all Pachyderm cluster pods: pachd is the server, dash is the UI, and etcd is the etcd key-value store. Okay. So first thing we’re going to do, we’re going to create a repository that we’re going to call Images, and if you do [inaudible] list. I’m sorry. So this is our repository that we created. Next thing we’re going to do, we’re going to put an image into that repository which is stored in here, and we’re going to call it Liberty. All right. I’m going to [inaudible] really quick. And now we can see our repo right here. We can see that we added 57 kilobytes into that repo, and we can also check the commit. So as soon as we add an image, there is a commit added for it as well. And you can also– yeah. And you can also see the file itself in the repository. And if we try to open it right here– I’m sorry. It opened on my– let’s do it once again. Yeah. Just open it right here. All right. And next step will be– the next step we’re going to create– in our next step, we’re going to create the Edges pipeline from a JSON file by using this command. It’s pachctl create pipeline -f and the path to the JSON file. All right. And we can run pachctl list pipeline. We can see that our Edges pipeline is now starting. And we can also check the jobs. Okay. For some reason, the job is not there yet.

Interesting. For some reason, our pipeline– okay, let’s see. I guess it’s going to take some time to run the job.

Okay. And if we go into our UI, we can see that we have the images repository and the images pipeline right here and the output repository that was automatically created to output our results in here. All right.

Yeah. For some reason, it’s not– okay, so now we have the job right here. Okay. And it’s right here right now. So we have 22 kilobytes added in there. Okay. Now we’re going to add a few more– we’re going to add a few more images in here. We’re going to add AT-AT png, and we’re going to add kitten in there. Okay. And if we do pachctl list commit images@master, you’re going to see all those images being added. And let’s check the job as well. So yeah, it ran all the jobs for that pipeline. Okay. And let’s create our Montage pipeline. All right. Okay. The job did not [keep?]. Oh, no. All right. Okay. It ran the Montage pipeline and the job succeeded, and the pipeline succeeded.

Yeah. And we can get this image. We can get our Montage png. Okay. So it’s opened on my screen, and this is what it looks like. Yeah. UI, for some reason, I don’t know why it’s not working right now. But let’s check once again. Yeah. So it added the montage to the DAG and shows all the recent jobs and the repository. And we have three data files in here that you can see they were added. And the montage is here as well. Yeah. Yeah. So this is pretty much– if you want to try this example – it’s actually our beginner tutorial for Pachyderm – join our Slack channel, check out PachHub and let me know if you have any other questions. [applause]

So first question is, I guess your presentation is going to be out on the site. Right?

Question: So one question I have is, so when you generate the hash and you have a huge amount of data, that will just take a long time, right?

Svetlana: Probably it will take some time. Yeah.

Audience: So if you have 500 gigabytes or whatever, if you’re going to use this, you have to set up the proper infrastructure.

Svetlana: So in your pipeline, you can set up parallelism for your– you can have multiple Kubernetes workers that would spread the workload, basically. And we have a concept of datums, each piece of your data. So if you notice in the pipeline, there is a field called glob that lets you break down your data into chunks. So it will process chunk by chunk. There’s a way to just the one hash.

Audience: So any change you do similarly, you’ll have to regenerate that hash.

Svetlana: I think so, yeah. Yeah. So again, you can define units with the glob pattern. If you have– for example, you have multiple directories and multiple files in those directories, you can specify with the glob pattern that if you make one tiny change in one file in there, it would only process the datum that that file represents.
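The way a glob pattern breaks a repository into datums can be sketched with a small Python function. This is a simplified model rather than Pachyderm's actual matching logic: the `split_into_datums` helper and the sample file paths are hypothetical, and the sketch assumes every segment of the pattern is a plain `*`, so only the depth of the pattern matters.

```python
from collections import defaultdict


def split_into_datums(paths, glob):
    """Group file paths into datums under a simplified reading of a glob pattern.

    Simplification (an assumption of this sketch): every segment of `glob` is "*",
    so only the number of segments matters. "/" yields one datum for everything,
    "/*" one datum per top-level entry, "/*/*" one per second-level entry.
    """
    depth = len([segment for segment in glob.split("/") if segment])
    datums = defaultdict(list)
    for path in paths:
        parts = [part for part in path.split("/") if part]
        if len(parts) < depth:
            continue  # too shallow to match the pattern
        key = "/" + "/".join(parts[:depth])
        datums[key].append(path)
    return dict(datums)


files = ["/2020/jan.csv", "/2020/feb.csv", "/2021/jan.csv"]
for pattern in ("/", "/*", "/*/*"):
    print(pattern, len(split_into_datums(files, pattern)))
```

With the deepest pattern, every file is its own datum, so a change to one file would require reprocessing only that datum, which is the behavior described in the answer above.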

Question: I have a question. How well integrated are you to AWS or some other cloud provider? Like, if I want to store my data on S3, or if I want– how would I fire up EC2 instances to feed it? Like I do have 500 gigabytes I process a day, so how would I use this for this?

Svetlana: You can run it on EKS. We have examples for that. You can store everything in your S3 buckets if you want to. It’s pretty seamless integration with all three major cloud providers: AKS, EKS, and GKE. Yeah. You can use MinIO as S3 client to– MinIO I think S3cmd and–

So any S3-compliant target can be used too.

Question: It’s probably all up on the site. But what’s the infrastructure that you have to have in place? Is it just one binary? I assume not. So if I want to go and deploy this and use this and so forth, is there anything I need besides whatever you package up?

Like how many nodes [inaudible]?

Yeah. Any kind of config, or is it just push button?

Svetlana: So when you deploy Pachyderm using our pachctl client, it generates a Kubernetes manifest that you can deploy anywhere you want by using kubectl apply, basically.

Question: Will this run on a single node cluster?

Svetlana: Yeah. So I’m running right now on minikube, basically, with the minimum.

Question: How does this compare to other– like there are many solutions like this. Yesterday we were hearing about Spark and Delta Lake, which sounds very similar to this. Can you provide a compare and contrast of this versus other data pipeline solutions?

Svetlana: I’m not familiar with the one you mentioned. I know that we can work with tools like Kubeflow, for example, or Airflow or JupyterHub, and none of them provides data versioning. They do provide pipeline infrastructure, but not really data set versioning.