As long as there is data, the data warehouse will continue to reign supreme as the de facto tool for analytics and business intelligence.

But the traditional data warehouse doesn’t cut it for today’s ever increasing volume and variety of structured, semi-structured, and unstructured data. Data scientists are realizing they need a modern approach to store, query, and analyze data. They need something built for the cloud that separates compute power from storage.

They need the ability to independently and elastically scale storage and compute.

And that’s why Snowflake has rightfully earned the hearts and minds of data professionals everywhere. It provides a simple storage approach and separated compute for complex queries. It’s these qualities that make it the best tool for scalable business intelligence and computationally intensive analytic workloads.

SQL is awesome, simple, ubiquitous and used everywhere. But, SQL was not designed to do complex processing. The solution could be to create some crazy SQL or use a language designed for complex data analytics like Python or Scala.

Data Warehouses Need Better Tools for Machine Learning

SQL is synonymous with querying and manipulating data in any data warehouse.

But have you ever tried to do anything more complicated than a series of SQL manipulations in Snowflake?

Whether it’s trying to do complex data transformations or build a machine learning model, you’ll soon find that trying to do that involves hacking together cumbersome, home grown solutions. You’d have to export data from your warehouse. Then you’d have to figure out which custom framework you need to adopt to process your data, whether it’s using a Python connector or some Java user-defined function. These workarounds have limitations like short processing times (>1 min) and limited language support (Python, Scala, Java).

This type of a pattern doesn’t work well for fast-moving, agile data science teams.

Data science teams require a reliable way to move data from data warehouses into flexible computation engines. This way you can run your machine learning code and more process intensive tasks. There are some tasks that require a lot more than structured data and SQL queries.

For those tasks you require support for unstructured data and general purpose programming languages.

Imagine that you’re trying to enrich your structured data with insights from unstructured text, image, audio or video data. SQL wouldn’t be much help. A task like that is best suited for programming languages and libraries typically written in Python, R or Scala because it’s impossible to do that type of computation with SQL.

In an ideal world you’d want the ability to easily combine that data you need to make informed decisions and use the right tool for the job.

Go Beyond the Limitations of SQL with Powerful Pipelines

The ideal situation would allow you to apply whatever logic you want to your structured and/or unstructured data in three simple steps.

First, you’d want to import data from structured data sources like Snowflake and unstructured data in your object storage into a computation engine. Second, you’d want the ability to apply programming logic to your data. And finally, you’d want to be able to export your results back to Snowflake.

Teams looking to access and run complex machine learning workflows on top of their data warehouse stack must build their own custom infrastructure.

We’re excited to announce our new integration with Snowflake, which makes it easier for you to do just that. Our integration allows you to:

-

- Integrate with Snowflake for easy data ingress and egress

- Orchestrate long-lived, multi-step, data-centric pipelines

- Run pipelines using any language (Python, R, Scala) or library

- Combine structured data warehouse data with unstructured data from sources like object storage (S3, etc.)

- Reproduce your workflow via full model lineage (code and data)

- Scale to petabytes of data while minimizing costs

Data Versioning is a MUST for Machine Learning with a Data Warehouse

A huge complaint of data scientists is that it’s not easy to version control your data warehouse.

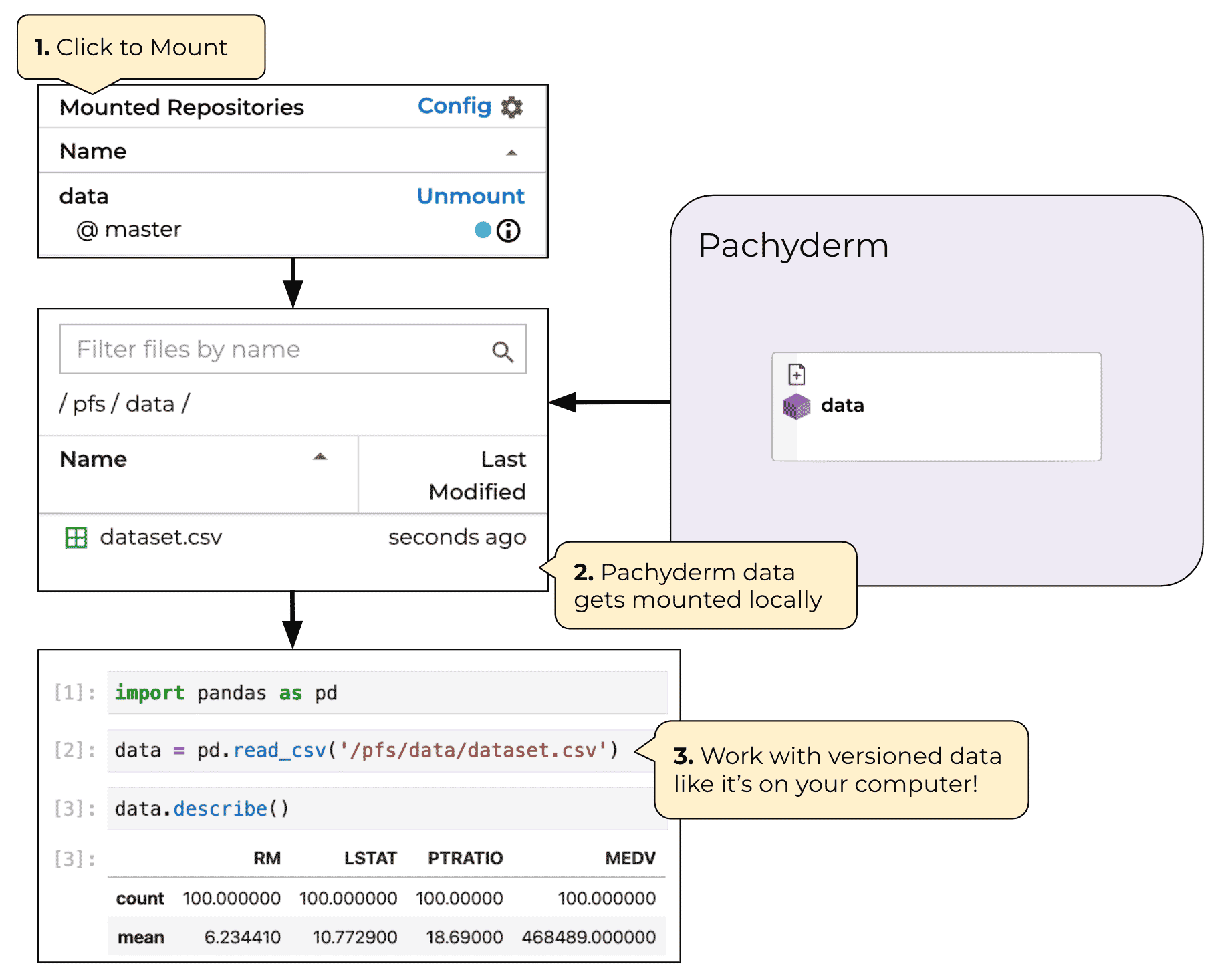

Ideally you want your source datasets and the outputs of your machine learning models to live in your data warehouses, and still enjoy the benefits of retaining model lineage. With Pachyderm everything is versioned. As soon as you import your data from Snowflake and commit it to Pachyderm, it is automagically versioned for you using git-like immutable commits to a repository.

How does that work?

You start by ingesting your query results as CSV files into Pachyderm. Then you commit the CSV file to the appropriate repository in the Pachyderm File System. Pachyderm then stores the history of your commits in a centralized location, allowing you to travel back in time and see the state of your query at any point in the past.

Ok, so what’s the big deal about that?

Now when you train a model you’ll always know exactly which snapshot of the data you trained on. New data causing some breaking changes to your model? No worries! You roll back your data and retrain your model on a previous snapshot if your newest model isn’t performing well.

Alright, that’s enough of the marketing fluff.

Snowflake Machine Learning Pipeline Example

Let’s see how all this works in a simulated real word use case: churn prediction.

You’ll use data from KKBox’s subscription based music streaming service Kaggle competition to understand how changing the definition of churn via upstream business processes can make you wish you had version controlled your data.

The hardest part about identifying customers who churn isn’t the machine learning model itself, it’s figuring out what you mean by churn.

This comes down to clearly defining your problem statement, which is an iterative process.

In this example, you’ll use Pachyderm to assess the impact domain drift has on changing churn definition and model performance. Using Pachyderm to create multi-step language agnostic pipelines, you’ll learn how to:

- Ingest data from Snowflake via the Pachyderm data warehouse integration

- Use Pachyderm to build language-agnostic pipelines that perform complex data processing steps like:

- Creating a data visualization pipeline in R

- Build a feature engineering pipeline in Python

- A model training pipeline in Python

- Make churn predictions and push them back to Snowflake

- See how you can rollback to a dataset that had a previous definition of churn and automatically

Next Steps:

- See the code on GitHub

- Request a demo to see it in action

- Watch our webinar