What does AutoML even mean?

At first, it seems like a simple and straightforward term: Automated Machine Learning.

Simple, right?

It’s basically the process of automating your AI stack.

But dig a little deeper and you’ll see it means a lot of different things to different people.

Big cloud providers are pushing the idea that AutoML is the AI equivalent of a drag-and-drop website editor. Just click and poof, all your data is cleaned, standardized and formatted nicely for training. Click again and zap, you can pick from well-known algorithms and train away.

But the truth is, we’re not there yet in 2020. Not even close.

We’ve got a long way to go before AI is a baked solution that any non-data scientist can roll into their applications and code with the ease of a low-code platform or a WYSIWYG editor.

While we’ve certainly made heavy progress in AI over the last decade, especially in computer vision and natural language processing, there aren’t many perfectly baked solutions with an easy-to-follow recipe. You can go on Rosetta Code and find a dozen ways to do a bubble sort, but we’re nowhere near an automated machine learning solution that hands you ten ways to do sentiment analysis that are actually useful in your app store app.

Where does that leave AutoML in 2020?

We’re in a much earlier stage of automating the AI/ML infrastructure stack and that’s perfectly all right.

It took decades for really strong drag-and-drop website editors to emerge, along with standard platforms like WordPress for building and running those sites. Before that, web design was a highly specialized skill that required coding, Photoshop, graphic design and more.

Of course, stunning web design is still a highly prized skill, but today a decent designer with a moderate skillset can create a beautiful website with ease. I whipped up that Practical AI Ethics website in a few days with the awesome Divi theme and WordPress, because the process of building incredible websites is a baked solution and the coders did the heavy lifting for me. We’re just not there yet with AI.

In many ways, AI development feels almost a decade behind where traditional software stacks and web design are today. Agile, DevOps and CI/CD are mature development practices that let small teams of coders scale rapidly and build applications that serve hundreds of millions of users. Even twenty years ago, supporting that kind of effort would have taken a massive programming team with homegrown infrastructure software.

AI/ML development is still a highly skilled and specialized endeavor. Instead of looking for a drag-and-drop AI designer, today’s AI dev teams need to think more about automating the day-to-day tasks that eat up 80% of their time, like cleaning and transforming data.

Take Epona Sciences, a company that specializes in buying, breeding and discovering the best racehorses in the world. With every thoroughbred a multi-million-dollar investment, the stakes are super high. Epona set out to revolutionize an industry steeped in ancient tradition with machine learning, statistical analysis and science. Along the way, they discovered that everything from the horse’s entire genetic profile and lineage, to the animal’s height and gait, to the size of its heart can make the difference between a winning horse and one that never really gets out of the gate.

But getting and processing all that information isn’t easy. They had to scrape it from websites, pull X-rays and genetic data from different companies, gather photos and videos from other sources, and get weather and race information from yet another place. Even worse, they found that many of the cutting-edge AI toolsets and frameworks they wanted to use were built by researchers, not enterprise software developers, so the software was rough around the edges.

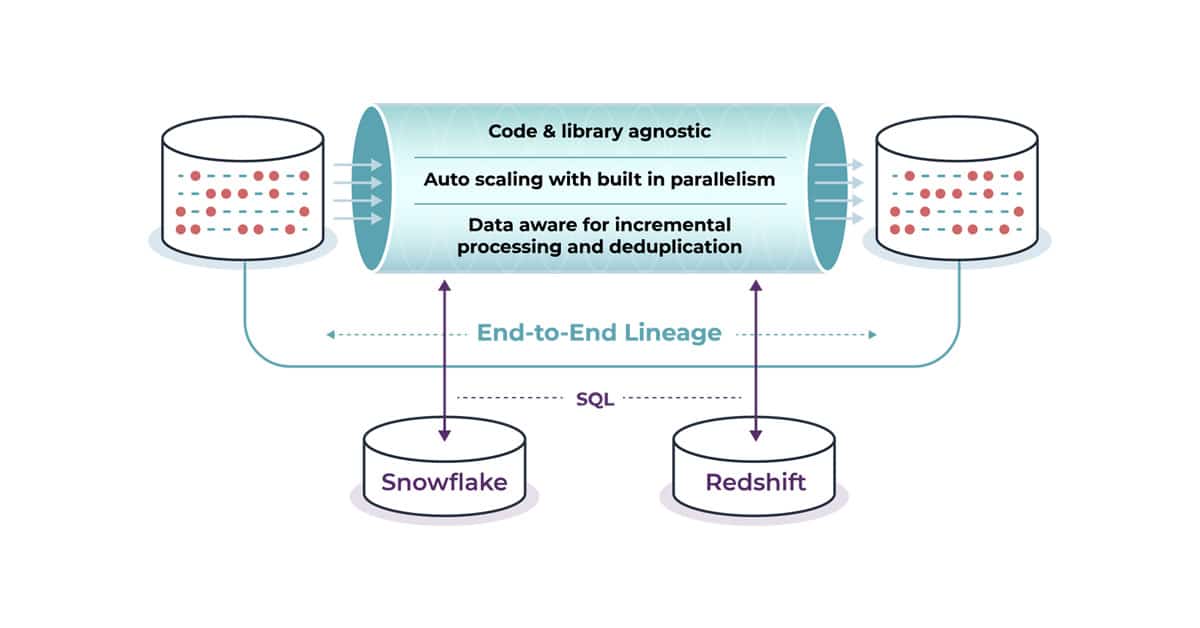

They were spending weeks just cleaning and formatting that data, with lots of manual steps and glue code holding it all together. They tried building their own automated pipeline before turning to Pachyderm, the automated pipeline and data versioning platform for big-time machine learning. It let them go from weeks of manual steps to getting all that data cleaned, transformed and formatted for their customers in 15 minutes.

That’s the key to automating your MLOps stacks today. You have lots of languages, tools and frameworks that all need to work together. Most of them are super rough around the edges. Pachyderm makes it easy to bring them all together like beads on a string. If you can package up the code and the tool into a container you can use it in Pachyderm.
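To make “package it into a container” a little more concrete, here is a minimal sketch of one cleaning step written as a plain Python script, the kind of thing you might bake into a container image. It assumes Pachyderm’s convention of mounting each input repo under `/pfs/<repo_name>` and collecting whatever the step writes to `/pfs/out`. The repo name `raw`, the function names and the cleaning rule (drop rows with empty cells or the wrong number of columns) are hypothetical, chosen only for illustration.

```python
import csv
from pathlib import Path

# Assumed Pachyderm convention: input repos are mounted at /pfs/<repo_name>
# and files written to /pfs/out become the step's output.
# "raw" is a hypothetical input repo name.
INPUT_DIR = Path("/pfs/raw")
OUTPUT_DIR = Path("/pfs/out")


def clean_rows(header, rows):
    """Keep rows that match the header width and have no empty cells."""
    width = len(header)
    cleaned = []
    for row in rows:
        cells = [cell.strip() for cell in row]
        if len(cells) == width and all(cells):
            cleaned.append(cells)
    return cleaned


def clean_directory(input_dir, output_dir):
    """Clean every CSV file in input_dir into output_dir; return rows kept."""
    output_dir.mkdir(parents=True, exist_ok=True)
    kept = 0
    for csv_path in sorted(input_dir.glob("*.csv")):
        with csv_path.open(newline="") as src:
            reader = csv.reader(src)
            header = [name.strip() for name in next(reader, [])]
            rows = clean_rows(header, reader)
        with (output_dir / csv_path.name).open("w", newline="") as dst:
            writer = csv.writer(dst)
            writer.writerow(header)
            writer.writerows(rows)
        kept += len(rows)
    return kept


# Only runs inside a pipeline container, where the /pfs mounts exist.
if __name__ == "__main__" and INPUT_DIR.is_dir():
    print(f"kept {clean_directory(INPUT_DIR, OUTPUT_DIR)} rows")
```

The script knows nothing about the rest of the pipeline: it reads files from one directory and writes files to another. That is what makes a step like this easy to swap out or reuse, whatever language or tool it happens to be written in.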

At each stage you define a short YAML or JSON pipeline spec that names the container, the script or tool to run, and the data it reads; each stage’s output then becomes the next stage’s input. Pretty soon, you’ve turned all those manual steps into smoothly running, automated ones. You’re spending a lot less time on the heavy lifting of sourcing, cleaning and fixing data and more time training your models or coming up with novel algorithms.
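Here is a rough sketch of that chaining, written in Python so it can generate the JSON for you. The field names (`pipeline.name`, `transform.image`, `transform.cmd`, `input.pfs.repo`, `input.pfs.glob`) follow Pachyderm’s pipeline spec format, but this is an illustration rather than a complete spec, and the stage names, image tags and script paths are hypothetical. It relies on Pachyderm naming each pipeline’s output repo after the pipeline itself, so a stage can read from the previous stage simply by naming it.

```python
import json


def pipeline_spec(name, image, script, input_repo, glob="/*"):
    """Build a minimal Pachyderm-style pipeline spec as a plain dict."""
    return {
        "pipeline": {"name": name},
        "transform": {"image": image, "cmd": ["python3", script]},
        "input": {"pfs": {"repo": input_repo, "glob": glob}},
    }


def chain(source_repo, stages):
    """Wire stages so each one reads the output repo of the stage before it.

    A pipeline's output repo shares the pipeline's name, so stage N's
    input repo is simply stage N-1's pipeline name.
    """
    specs = []
    upstream = source_repo
    for name, image, script in stages:
        specs.append(pipeline_spec(name, image, script, upstream))
        upstream = name
    return specs


if __name__ == "__main__":
    # Hypothetical stage names, images and scripts, for illustration only.
    stages = [
        ("clean", "example/clean:1.0", "/clean.py"),
        ("features", "example/features:1.0", "/features.py"),
    ]
    for spec in chain("raw", stages):
        print(json.dumps(spec, indent=2))
```

Each printed spec could be saved to a file and submitted with `pachctl create pipeline -f <file>` (check the exact syntax for your Pachyderm version). Because pipelines process new commits in their input repos, fresh data landing in `raw` flows through `clean` and then `features` without anyone re-running the steps by hand.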

While truly drag-and-drop automated machine learning is probably a decade or more away, you don’t have to wait to start automating much of your current pipeline. Pachyderm lets you get rolling today.

Lots of little manual steps create all kinds of errors. Forget one little part and it cascades down the line, introducing errors into your models or forcing you to start all over. Even worse, it’s super time-consuming: the more manual steps, the longer everything takes.

If you’re working in AI today, focus on automating a little lower down the stack and you’ll see a big boost in productivity and production. The faster you can get data from its original source into your pipeline, the faster your special forces corps of data scientists can do what they do best:

Building amazing models.

With Pachyderm you can build models that matter instead of wasting time cleaning and processing data.

Focus on what you love to do, automate everything else and the rest just flows.