Healthcare faces unprecedented staffing, patient management, and operational challenges, and manual, disconnected data processing and reporting add yet another roadblock to improving the quality of care, balancing capacity, and driving better patient outcomes. But what does it mean to revitalize healthcare? It starts with shifting organizational data management policies from one-size-fits-most to a comprehensive data governance approach that uses real data and analytics to find treatment bottlenecks, manage equipment maintenance schedules, and more.

Because every healthcare organization has different data and analytics needs, there is a breadth of opportunity for experimentation and innovation. This article will discuss the current state of the healthcare industry, how data analytics are driving disruption, and how data pipelines and analytics are used in healthcare.

Healthcare Growth Is Exploding

Healthcare is an 808-billion-dollar industry, growing at a rate of 4.29% per year. This fast-paced growth presents an opportunity for innovative companies to use data-driven pipelines to improve outcomes.

Healthcare touches everyone, and anyone who has ever had an illness or injury knows firsthand how complex and expensive the current system can be. This complexity makes it difficult for stakeholders to work together to improve patient outcomes. However, by leveraging analytics from clinical data sources, providers can extract the insights they need for value-based care.

Data and Analytics are Key to Harnessing Growth Potential

The rapid advancement of technology has led to an explosion of data. Healthcare organizations must figure out how to harness this opportunity for growth and innovation if they want to remain relevant in a rapidly changing industry.

In healthcare organizations, analytics pipelines access data from storage sources like data warehouses. They may standardize and transform the data before delivering it to users via dashboards, reports, and internal tools. Outputs such as service time predictions, reporting dashboards, and optimized scheduling can improve both quality of care and time efficiency.
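As a rough illustration of the standardize-and-transform step, here is a minimal Python sketch. The visit rows, field names, and timestamp format are hypothetical stand-ins for whatever a warehouse query would return; the point is only to show messy department labels being normalized before an average service time is computed for a dashboard.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean

# Hypothetical rows, shaped like the result of a warehouse query.
RAW_VISITS = [
    {"dept": " Radiology", "check_in": "2023-01-05 09:00", "check_out": "2023-01-05 09:45"},
    {"dept": "radiology", "check_in": "2023-01-05 10:00", "check_out": "2023-01-05 10:30"},
    {"dept": "Cardiology ", "check_in": "2023-01-05 08:15", "check_out": "2023-01-05 09:15"},
]

TIME_FORMAT = "%Y-%m-%d %H:%M"  # assumed timestamp format


def standardize(row):
    """Normalize the department label and parse both timestamps."""
    return {
        "dept": row["dept"].strip().lower(),
        "check_in": datetime.strptime(row["check_in"], TIME_FORMAT),
        "check_out": datetime.strptime(row["check_out"], TIME_FORMAT),
    }


def average_service_minutes(rows):
    """Transform standardized visits into average service minutes per department."""
    durations = defaultdict(list)
    for row in map(standardize, rows):
        minutes = (row["check_out"] - row["check_in"]).total_seconds() / 60
        durations[row["dept"]].append(minutes)
    return {dept: mean(values) for dept, values in durations.items()}


if __name__ == "__main__":
    for dept, minutes in sorted(average_service_minutes(RAW_VISITS).items()):
        print(f"{dept}: {minutes:.1f} min")
```

Without the standardization step, " Radiology" and "radiology" would be reported as two separate departments, which is exactly the kind of inaccuracy a pipeline is meant to remove before numbers reach a dashboard.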

For more advanced organizations, algorithm-based predictive analytics and machine learning can help departments and leaders improve quality of care, capacity usage, and staffing coverage.

Every Healthcare Organization Has Different Needs When It Comes To Data And Analytics

Healthcare analytics pipelines are not without their challenges, however.

One of the biggest challenges of healthcare analytics is ensuring that you have the right tools to support your organization’s changing needs. As your organization grows and adapts, your pipelines and analytics will need to change and scale with it.

If you’re having trouble managing existing data pipelines, one of the first things to do is take a step back and consider what your current pipeline looks like and how it could be improved. Your data needs are not static; they will probably change over time as new sources emerge or existing ones become obsolete. To prepare for these changes, your pipeline must be flexible enough to integrate new data sources easily.

Other challenges for enterprise data pipelines include:

- Delivering accurate numbers to the right teams

- Putting data analysis in the hands of decision-makers

- Understanding how data changes between ingest and output

How Are Data Pipelines And Analytics Used In Healthcare?

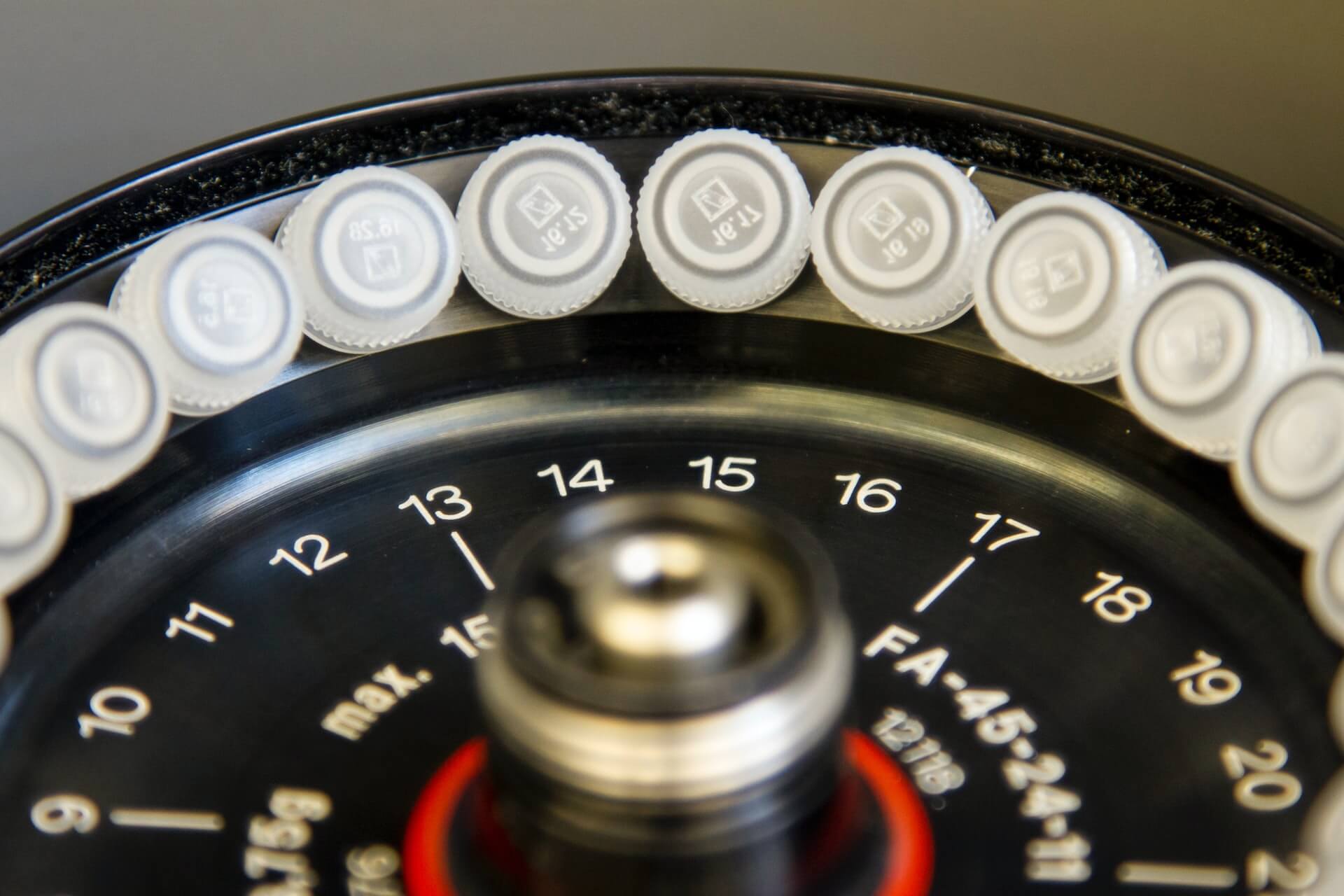

Equipment Monitoring, Maintenance, And Following Long-Term Value Of Investments

Hospital asset tracking is one example of analytics at work in healthcare. Tracking the lifecycle of expensive equipment and its consumable usage rate can help operations teams understand maintenance schedules, anticipate and avoid outages of critical equipment, and look back at the lifetime value of each piece of equipment.

With flexible data pipelines delivering device analytics in healthcare, care delays due to equipment failure can be reduced, and decisions about future procurement can be data-driven.
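To make the maintenance-scheduling idea concrete, the sketch below estimates how many days remain before each device reaches its service interval and lists the ones due within a planning horizon. The `Device` fields, the example fleet, and the 14-day horizon are all hypothetical; a real system would draw usage hours from device telemetry and intervals from manufacturer guidance.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    hours_since_service: float     # usage accumulated since the last service
    service_interval_hours: float  # assumed service interval for this device
    avg_daily_hours: float         # recent average usage per day


def days_until_service(device):
    """Estimate days remaining before the next service is due (0 if overdue)."""
    if device.avg_daily_hours <= 0:
        return float("inf")  # an idle device never reaches its interval
    remaining = device.service_interval_hours - device.hours_since_service
    return max(remaining, 0) / device.avg_daily_hours


def due_soon(devices, horizon_days=14):
    """Return devices due within the horizon, most urgent first."""
    due = [d for d in devices if days_until_service(d) <= horizon_days]
    return sorted(due, key=days_until_service)


# Hypothetical fleet used only for illustration.
FLEET = [
    Device("MRI scanner A", hours_since_service=450, service_interval_hours=500, avg_daily_hours=10),
    Device("Ventilator 12", hours_since_service=100, service_interval_hours=1000, avg_daily_hours=20),
    Device("Infusion pump 7", hours_since_service=720, service_interval_hours=700, avg_daily_hours=6),
]

if __name__ == "__main__":
    for device in due_soon(FLEET):
        print(f"{device.name}: service due in {days_until_service(device):.0f} days")
```

A linear projection from average daily usage is a deliberately simple assumption; organizations with richer telemetry might replace it with a predictive model.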

Surfacing And Delivering Patient Data And Treatment Performance With Compliant Architecture And Data Versioning

Without a flexible management and monitoring platform, adapting, repeating, reusing, and scaling data pipelines to experiment with patient scoring, treatment patterns, and patient trends can require significant engineering time spent rebuilding pipelines.

Automating pipelines and processing only incremental changes can shorten machine learning iteration cycles in healthcare settings. Scalability and the ability to make small or gradual changes are especially important for organizations that run on-premise systems because of privacy and security needs.

What Factors Slow Down Healthcare Pipelines?

Healthcare analytics is a powerful tool for improving the quality of care and reducing costs. But the process can be slow, especially in larger healthcare organizations.

The following are some factors that slow down healthcare analytics pipelines:

Data Access

The presence of sensitive data and legacy systems both hinder access to the data needed for pipeline applications, which keeps these projects from getting off the ground.

Dataset Sizes & Data Awareness

Thousands of patient records, imaging data, and multiple data storage sources make reprocessing everything on each run slow. Data-driven pipelines avoid this by intelligently processing only new and changed data.

Data-driven pipelines also allow for incremental action, critical for efficient workload scaling and load balancing.
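One common way to detect new and changed data is to keep a content hash for each record and compare it on the next run. The sketch below assumes records are small JSON-serializable dictionaries keyed by a hypothetical record ID; it is a generic illustration of the idea, not the mechanism of any particular pipeline product.

```python
import hashlib
import json


def fingerprint(record):
    """Stable content hash of a record (keys are sorted so order does not matter)."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def select_new_or_changed(records, seen):
    """Return IDs of records that are new or changed since the last run.

    `seen` maps record ID -> fingerprint from earlier runs and is updated in place.
    """
    to_process = []
    for record_id, record in records.items():
        digest = fingerprint(record)
        if seen.get(record_id) != digest:
            to_process.append(record_id)
            seen[record_id] = digest
    return to_process


if __name__ == "__main__":
    seen = {}
    batch = {"p1": {"heart_rate": 72}, "p2": {"heart_rate": 80}}
    print(select_new_or_changed(batch, seen))  # first run: every record is new

    batch["p2"] = {"heart_rate": 85}  # changed record
    batch["p3"] = {"heart_rate": 60}  # new record
    print(select_new_or_changed(batch, seen))  # second run: only p2 and p3
```

On the second run the unchanged record is skipped entirely, so the work done scales with the size of the change rather than the size of the dataset.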

Lack Of Automation

Manual steps in self-built data pipelines reduce efficiency and introduce human error into an otherwise automated process. Automated pipelines, by contrast, minimize the likelihood of error and can include self-healing routines.
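A simple form of self-healing is retrying a failed step automatically instead of waiting for someone to rerun it by hand. The following sketch wraps any pipeline step in a retry loop with exponential backoff; the function name, default attempt count, and delays are illustrative assumptions rather than settings from a specific tool.

```python
import time


def run_with_retries(step, max_attempts=3, base_delay=0.1, sleep=time.sleep):
    """Run `step`, retrying with exponential backoff if it raises.

    The final failure is re-raised so that a persistent problem still surfaces.
    `sleep` is injectable so the wait can be replaced in tests.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return step()
        except Exception:
            if attempt == max_attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))


if __name__ == "__main__":
    attempts = {"count": 0}

    def flaky_load():
        """Hypothetical step that fails twice, then succeeds."""
        attempts["count"] += 1
        if attempts["count"] < 3:
            raise ConnectionError("source temporarily unavailable")
        return "loaded"

    print(run_with_retries(flaky_load))
```

Retries only help with transient faults such as a briefly unavailable source; a step that fails on every attempt still raises, so genuine data problems are not silently hidden.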

Architecture Management

Pipeline systems that are not planned and built carefully are more likely to have errors, slower delivery times, and other problems. Allocating more processing power to a slow data pipeline won’t necessarily improve the speed at which you can iterate and deliver your data.

The Health Analytics Guru emphasizes this point: “Throwing more storage and processing power is an expensive, lazy and temporary solution to poorly designed data architectures.”

Privacy And Data Storage

Finally, Health Insurance Portability and Accountability Act (HIPAA) compliance requirements and ongoing technological change are complex enough that healthcare data held in various storage locations may need to be processed on-premise, which underscores the need for efficiency and scalability.

The Right Data-Driven Pipelines Make Healthcare Analytics Faster

When processing large datasets, data-driven pipelines can look past the dataset as a whole and schedule work based on the needs of each datum: Is it new or changed? Which worker is best suited to process it? Because only the data that needs processing is processed, this functionality offers a significant resource benefit.
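The worker-assignment question can be illustrated with a small greedy heuristic: hand each datum to whichever worker currently has the least work queued. The datum names, cost estimates, and worker names below are hypothetical, and real schedulers weigh many more factors; this is only a sketch of the per-datum idea.

```python
import heapq


def assign_to_workers(datum_costs, worker_names):
    """Greedily assign each datum to the currently least-loaded worker.

    `datum_costs` maps a datum name to an estimated processing cost.
    Larger datums are placed first, which tends to balance load better.
    """
    heap = [(0, name) for name in worker_names]  # (current load, worker)
    heapq.heapify(heap)
    assignment = {name: [] for name in worker_names}
    for datum, cost in sorted(datum_costs.items(), key=lambda item: -item[1]):
        load, name = heapq.heappop(heap)
        assignment[name].append(datum)
        heapq.heappush(heap, (load + cost, name))
    return assignment


if __name__ == "__main__":
    costs = {"scan_a": 8, "scan_b": 5, "labs_c": 4, "notes_d": 3}
    for worker, datums in assign_to_workers(costs, ["w1", "w2"]).items():
        print(worker, datums)
```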

Data-driven systems can also leverage incremental processing, which is enabled by combining Kubernetes with your pipelines.

With data version control, healthcare analytics pipelines have the complete lineage of their datasets and all transformations made through the pipeline. This makes debugging faster and easier for data operations teams, ensuring the suitability of data practices.
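The lineage idea can be sketched with content-addressed entries, where each version records the transformation that produced it and a link to its parent. This is a simplified, hypothetical model written for illustration; it does not reproduce the storage format or API of any data versioning tool.

```python
import hashlib
import json


def commit(data, transform, parent=None):
    """Create a lineage entry whose ID depends on the data, the transform, and the parent."""
    body = {
        "data": data,
        "transform": transform,
        "parent": parent["id"] if parent else None,
    }
    digest = hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()
    return {"id": digest, "transform": transform, "parent": parent, "data": data}


def lineage(entry):
    """Walk from an entry back to the original ingest, newest transform first."""
    steps = []
    while entry is not None:
        steps.append(entry["transform"])
        entry = entry["parent"]
    return steps


if __name__ == "__main__":
    # Hypothetical three-step pipeline over temperature readings.
    raw = commit(["98.6F", "99.1F"], "ingest")
    cleaned = commit([98.6, 99.1], "strip_units", parent=raw)
    summary = commit({"mean_temp": 98.85}, "average", parent=cleaned)
    print(lineage(summary))
```

Because every entry points to its parent, a surprising value in a report can be traced back step by step to the raw input that produced it, which is what makes debugging faster.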

The healthcare industry is changing, and it’s changing fast. Data and analytics are at the heart of this transformation and will continue to drive progress in the coming years. A tool like Pachyderm, which combines version control with data-driven pipelines and incremental processing, enables healthcare providers and business teams to track more information and turn it into actionable insight faster.